I cannot in good conscience sign a nondisclosure agreement or a software license agreement. For years I worked within the Artificial Intelligence Lab to resist such tendencies and other inhospitalities, but eventually they had gone too far: I could not remain in an institution where such things are done for me against my will. So that I can continue to use computers without dishonor, I have decided to put together a sufficient body of free software so that I will be able to get along without any software that is not free. I have resigned from the AI Lab to deny MIT any legal excuse to prevent me from giving GNU away.

— Richard Stallman, Why I Must Write GNU

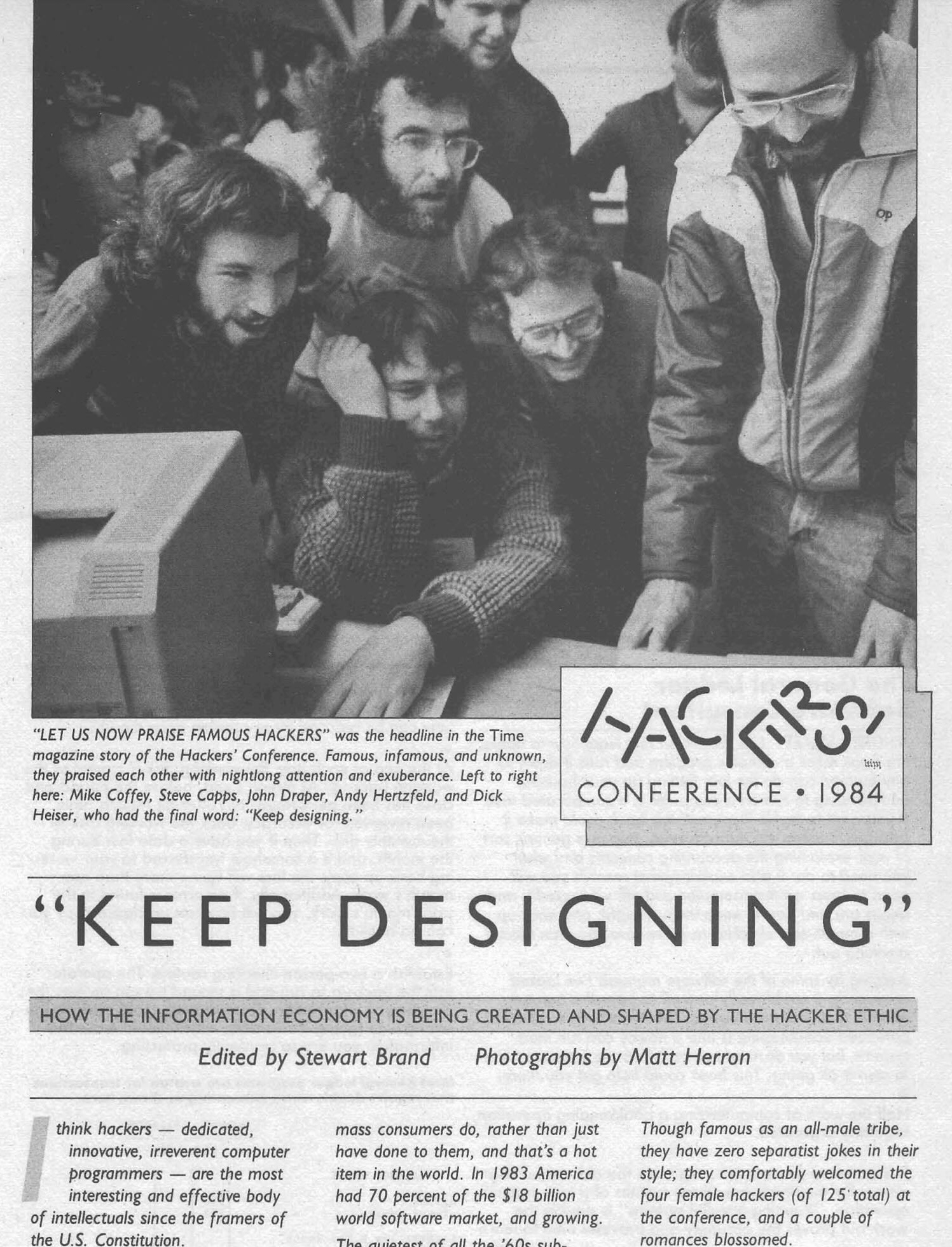

In 1983, Richard Stallman announced the start of the GNU project. He aspired to develop a new operating system, but his declaration was more than a technical proposal. The project required him to quit his job as a prominent researcher at MIT, and he cites a political reason for its inception: free software. Here, “free” means users have the ability to see, edit, and share the source code of the software they use. The next year, at the 1984 Hacker’s Conference, Stallman declared that his ambitions had grown even larger. He wanted more than just a free operating system. He now sought “to make all software free.”

By focusing their efforts on this one goal for the last four decades, Stallman and other proponents of free software have won a near-total victory over proprietary code. With 2015's death of Internet Explorer, the important bits of every browser worth mentioning have become open source. Anyone can read and modify the code of the most popular operating system in the world, Android. (Its locked-down competitor, iOS, enjoys a worldwide popularity of just 15%.) Microsoft, the original arch-nemesis of the free software movement, now runs GitHub, NPM, and the most widely used code editor, VSCode, which it distributes for free. While many people successfully run their personal computers on completely free or open source software, no one has done the same with completely proprietary code. When you visit your bank’s website, check your email, or browse your friend’s Instagram feed, you depend mostly on open source software at every layer of the stack. Even when programmers create small slivers of proprietary code, they still need open source software to write it.

Visit Stallman’s personal website today, however, and you won’t find much evidence of this overwhelming victory. Instead, he posts urgent declarations to avoid nearly every tech company’s products, whether that’s Uber, Facebook, Amazon, or Zoom, citing proprietary software, mass surveillance, and censorship. Stallman demands his readers retreat from modern technological life and the ubiquitous apps and devices that constitute it. This doesn’t sound like a victory march. Instead, Stallman warns that we face more peril than ever. This presents an awkward contradiction: if we have access to more source code than ever before in human history, why does Stallman see such grave dangers to our digital freedoms?

I worry over these dangers too. Mass surveillance and the far-reaching powers of technology platforms scare me. However, Stallman and I would resolve this contradiction — between the widespread availability of source code and the ever-growing power of technology companies — in entirely different ways. Stallman would say that while the public has won plenty of source code, this success is not total, and further, much of the available code isn’t free software proper because its authors license it in a way that allows proprietary derivative works. For him, the danger originates in the numerous pockets of proprietary software that still thrive today. In this post, I would like to present an alternative theory: source code access does not guarantee digital freedoms. In fact, by fixating on source code access, Stallman’s free software principles obscure much of what actually makes technology oppressive, serve as a pressure relief valve for frustrated programmers who might otherwise mount substantive opposition to the technology industry, and, worst of all, limit what kinds of alternatives we can imagine.

When reading about these principles, you’ll find that Stallman repeats a single word again and again throughout his theories of digital freedom: “user.” This user holds so much importance for Stallman that he bases the entire definition of free software upon it.

Free software is software that gives you the user the freedom to share, study and modify it. We call this free software because the user is free.

But although I can find endless essays hashing and rehashing what “freedom” specifically means in the context of free software, the similarly ubiquitous user receives little attention of its own. Stallman assumes the reader already understands the meaning of this user. Despite the ambiguity, he invariably defines the seemingly specific “freedom” in terms of this user. If freedoms are always user freedoms, but we don’t first examine this user, then we build our understanding of freedom on a brittle foundation.

Stallman had countless other words to choose from here. He could have emphasized “digital rights” more generally, he could have used another word like “people” or “customer” or “programmer”, or he could have simply said “rights” and “freedom” without qualifying them. Instead, he specifically emphasizes user rights and user freedoms. In contrast to these other possibilities, “user” implies that the relationship between human and technology is one of use, that is, the technology is a tool. Stallman fears proprietary code because it makes software a less useful tool. Free software advocates provide countless examples of this feeling. Perhaps most canonically, this fear inspired Stallman to create free software in the first place: it all started when he couldn’t make a printer at MIT send a warning email if it jammed, because the manufacturer refused to share the printer’s code with him. Since then, Stallman’s Free Software Foundation has protested DRM in software tools because “DRM creates a damaged good; it prevents you from doing what would be possible without it.” They’ve fought for the right to install custom code on tractors because a closed software platform “makes it nearly impossible to repair certain older models when manufacturers decide to no longer maintain the software, which forces farmers into purchasing new equipment.” At their core, all these arguments concern the risk that a computer becomes less useful. I agree we should act against tractor manufacturers who use software to limit tractor repairability, but because the Free Software Foundation spotlights the utility or lack of utility of tools, they tend to ask only about technological concerns, like the mechanism by which a tractor prevents third parties from installing new code. Free software does not address the conditions that made it desirable for the tractor manufacturer to do this in the first place. This leaves them playing legal whack-a-mole with each new threat that crops up: even if they win the right to customize tractor software, there will always be some other fight they must suddenly rally for. They take a reactive approach, not a proactive one.

By centering user freedom while failing to analyze or define the user, free software principles also completely disregard what we should do when this user wields technology to do something horrible. If DoorDash or Uber published every line of their code base, Stallman’s theories would offer no argument against their mistreatment of drivers. In fact, free software’s principles would say these companies have the right to freely use and edit any software I release as an independent developer to further their commercial goals; in this situation, DoorDash and Uber become the users whose rights Stallman is championing. And yet, even though I must give them my code, would publishing their own source code diminish these companies’ power in any way? These platforms certainly need that code to operate, but their power does not originate from it. You could ask the same questions about companies that already release their source code to the public: if releasing this code is sufficient for freedom, then on what basis can we complain about Chrome’s monopoly? Why does it matter that Google kneecaps ad-blocking APIs or adds ad-targeting features? We could also move beyond the commercial world and look at a more extreme case: what should I do if I do not want the military to use my code? Stallman does not ask the identity of the user because doing so would bring him face-to-face with complexities that conflict with the simplified world of free software. Centering user freedom only makes sense when you don’t ask who the user is.

Given that Stallman’s arguments don’t effectively challenge the power of the companies he derides, we probably shouldn’t be surprised that those companies agree with his framing of freedom as a matter of maximizing utility. In fact, technology companies broadcast this same idea when they want to win the public’s consent to distribute their products. When they describe people as “users” and their products as “tools”, they obscure the intent, responsibility, and power they have as the creators of that technology. Sure, Stallman and his sworn enemy Mark Zuckerberg might squabble about source code and licensing, but the frameworks through which they understand technology itself mirror and inform each other. Stallman doesn’t just fail to expose this process; he ties another blindfold over our eyes. Businesses adopted hacker ideals and brought free software the near-total success it enjoys today not merely because they were able to corrupt it, but because its underlying ideals supported their monopolistic practices from the very beginning.

This philosophical mirror between major technology companies and free software proponents has been with us for some time. At the 1984 Hacker’s Conference, when a dozen Apple employees (almost a tenth of all conference attendees by my count) listened to Stallman announce his ideas, Apple had long outgrown its tiny startup phase. Almost half a decade earlier, it had IPOed at a valuation of over $1.8 billion, and by the time of the conference it had many thousands of employees. That same year, Apple aired its famous “1984” Super Bowl ad, in which access to a tool (specifically, a hammer thrown at a screen) brings freedom to a crowd of drones oppressed by a Big Brother-like figure. But Apple employees and Stallman weren’t the only ones at the conference with this utilitarian ideal. Steven Levy, in the book that kicked off the Hacker’s Conference, describes the first tenet of the hacker ethic as “access to computers”, a phrase perhaps inspired by the subtitle of Hacker’s Conference organizer Stewart Brand’s Whole Earth Catalog: “access to tools.” Perhaps the work of conference attendees Ted Nelson, Roger Gregory, and Mark Miller most clearly expressed this tool-oriented mindset: they worked on Xanadu, a writing app perpetually in the prototype phase that nonetheless inspired the creation of the web. In his 1974 book Computer Lib, Nelson describes his general mission for Xanadu, saying “by Computer Lib I mean simply: making people freer through computers. That’s all.” Xanadu is not merely a writing tool; Nelson hopes that maybe, just maybe, giving users access to a mind-expanding computer tool will allow “some precocious grade-schooler or owlish hobbyist” (notably, an individual working alone) to find a strategy that solves the coming crises of thermonuclear war, overpopulation-induced famine, and a proto-climate-change “winter of the world” where humanity poisons everything. When we think utility alone can guarantee freedom, we find it easy to believe that we can just give people access to useful digital tools and they will save themselves. This fixation on utility and usefulness doesn’t just appear in Stallman’s work. Rather, it forms one of the core pillars of hacker culture, and holds up its corporate and anti-corporate wings alike.

I’m far from the first to ask why free software’s massive success failed to secure the freedoms that Stallman hoped for. Chris Dixon asks this same question in his book Read Write Own, published earlier this year. However, when hackers like Dixon consider this question, they tend to debate various technological changes they can make to free software programs, rather than examine problems with the overall framework of utilitarian thinking itself. I agree completely with him when he laments, at least in his public statements, the way “corporate networks” like YouTube and Facebook limit who can programmatically access posts via APIs, an ability he calls “remixing.” But rather than asking what makes it desirable for these companies to add these limits (perhaps one of Dixon’s colleagues at Andreessen Horowitz would know) or reconsidering why he thinks software could ever guarantee these kinds of freedoms in the first place, he instead proposes that the right technology will fix this: blockchains.

Where corporate networks failed, blockchains provide a solution. Blockchain networks make strong commitments that the services they offer will stay remixable, without needing anyone's permission, in perpetuity.

— Chris Dixon, Read Write Own

Dixon is not the only idealist today who argues that new technological designs can solve problems of freedoms and rights. Hackers repeat this over and over again; some critics of technology call this approach “techno-solutionism.” We see the same pattern among developers of decentralized social networks like Mastodon and Bluesky, who hope that designing the right protocol can save the next Twitter if it sells to a tyrannical owner. In particular, recent debates among hackers over whether Bluesky’s protocol can be considered “truly decentralized” miss the larger question of what else we need beyond decentralization to achieve this political goal in the first place. Proponents of “end-user programming” repeat this yet again when, inspired by Hacker’s Conference attendee Bill Atkinson, they say we have a “moral imperative” to design a programming environment accessible to nontechnical users, preventing “a world where only a tiny elite of high priests (aka ‘programmers’) have control over what happens in our computing lives.” These technological discussions easily tempt us because, as programmers, we find it reassuring to think that major issues can be solved with the right engineering. This is exactly why we are drawn toward thinking of freedom as a matter of utility, and toward the ubiquitous user: we can debate utility with our well-practiced technological vocabulary. There’s nothing inherently wrong with thinking about utility; it’s a necessary part of writing code. However, these hackers don’t just apply this perspective to their database performance measurements! They apply it to freedom. Fixating on this technological debate makes it impossible to ask whether maximizing utility can actually secure freedoms, an assumption that can’t be questioned technologically by comparing metrics or evaluating algorithms. In this way, hacker ideology narrows the scope of how technology ethics can even be discussed. It distracts programmers frustrated with their world with an endless loop of technological design changes that won’t change anything material.

Dixon demonstrates another common theme within hacker culture — he hopes blockchain networks can make some political guarantee “in perpetuity.” This instinct, again, comes naturally to us programmers. When building a distributed system, I try to write code certain to work under any conditions, whether that’s a temporary network hiccup or a datacenter literally melting from losing all its coolant. However, when we apply the same techniques to something like the freedom to share information, we get blockchains and free software — both succeed in prescribing a formula or algorithm, but in neither case is a freedom itself actually guaranteed, and the result is brittle and susceptible to corporate capture. Some things cannot be engineered.

Rather than continue to debate the utility of various approaches to giving users access to tools, perhaps it’s time to instead ask: what are we missing that can’t be provided by tools at all? The hacker mindset encourages us to seek freedom from technology only by maximizing the usefulness of that technology. We cannot even ask how to build trust or solidarity with each other, because these can’t come from simply distributing the right tools. Instead, we waste our time attempting to codify these relationships into protocols that can be engineered into existence even in the absence of trust. The alternative is certainly not to reject the use of computers, nor do I want to condemn the generous programmers who, by releasing their source code publicly, create the digital world we live in. I see a beautiful dignity in releasing your code to the world because you love it and want to share it. But we limit our political imagination when we believe giving people access to a particularly designed tool can guarantee freedoms. I do not reject the hacker ethic outright. I instead hope that one day we will see what lies beyond it, and start to ask the questions we have missed for the last half century.

You're reading Five Decades of Access to Tools, an ongoing series.

- Part 2: Beyond Free Software (you are here)