“Your code is your understanding of the problem you’re exploring. So it’s only when you have your code in your head that you really understand the problem.” — Paul Graham

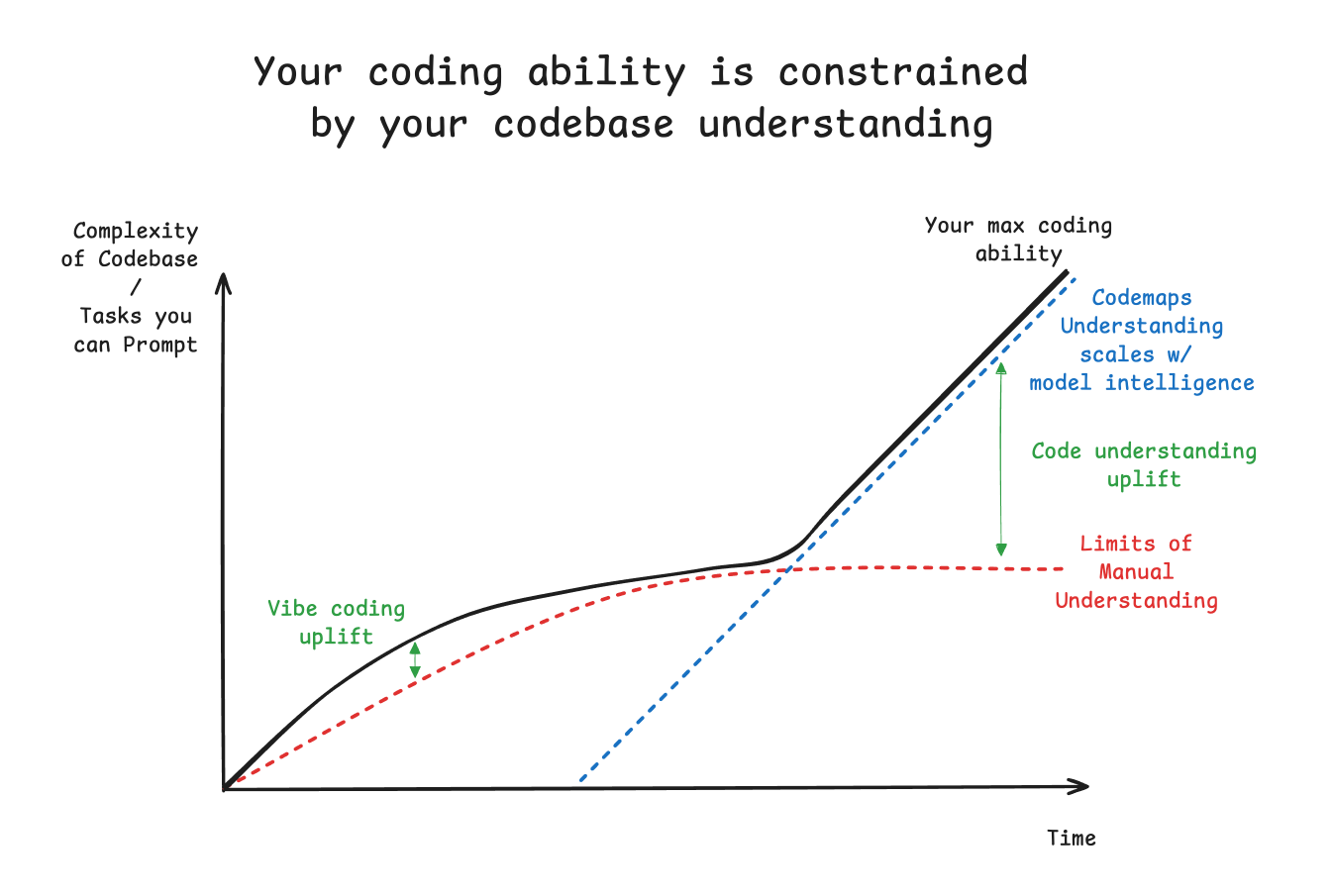

Software development only becomes engineering with understanding. Your ability to reason through your most challenging coding tasks is constrained by your mental model of how things work — in other words, how quickly and how well you onboard to any codebase for solving any problem. However most AI vibe coding tools are aimed at relieving you of that burden by reading → thinking → writing the code for you, increasing the separation from you and your code. This is fine for low value, commodity tasks, but absolutely unacceptable for the hard, sensitive, and high value work that defines real engineering.

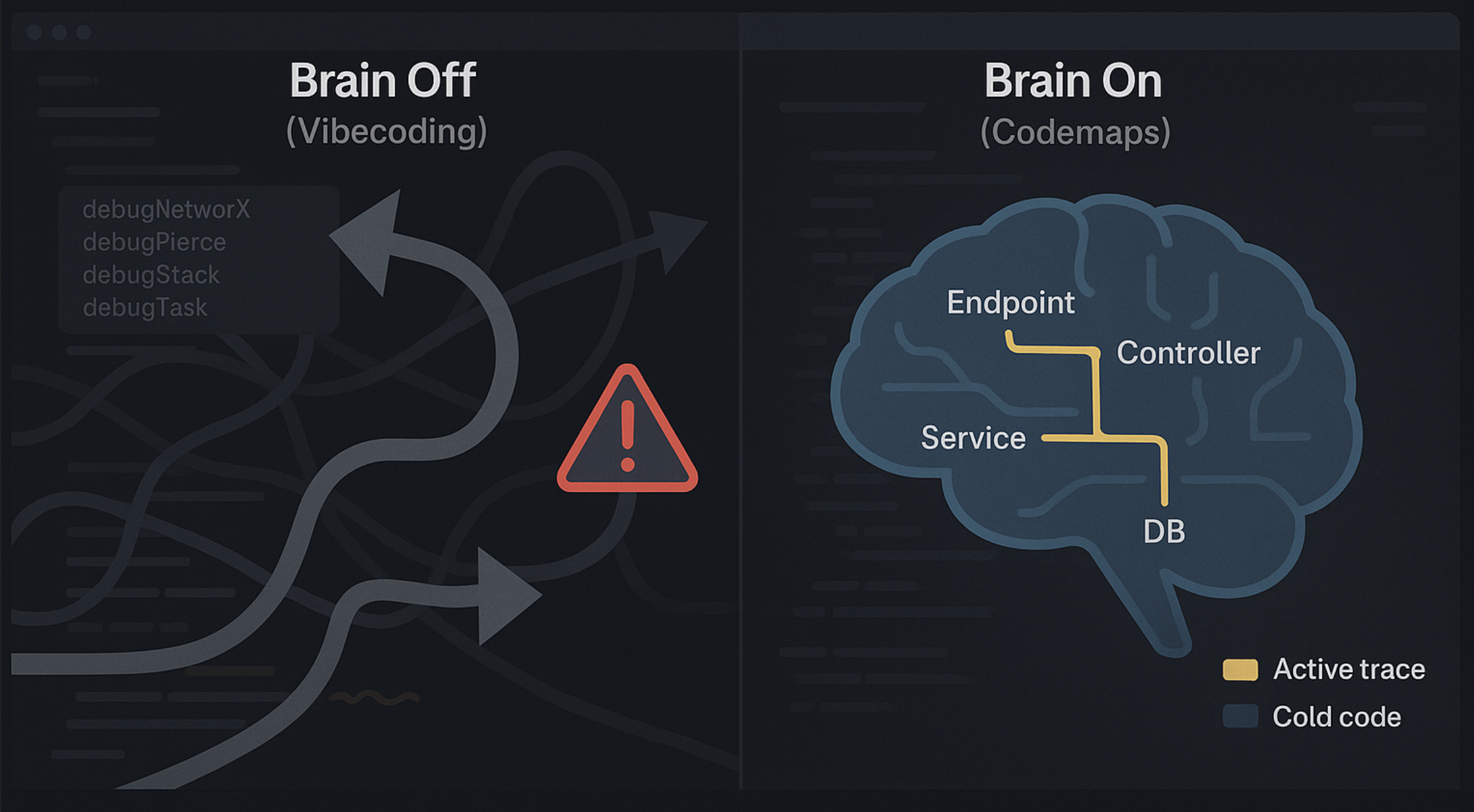

We all need more AI that turns your brain ON, not OFF.

Today we are announcing Windsurf Codemaps, which are first-of-its-kind AI-annotated structured maps of your code, powered by SWE-1.5 and Claude Sonnet 4.5. Building on our popular work from DeepWiki and Ask Devin, Codemaps is the next step in hyper-contextualized codebase understanding, grounded in precise code navigation.

Why Codemaps?

Every engineering task — debugging, refactors, new features — starts with understanding. Great engineers aren’t just good at writing code; they’re good at reading it, building mental models that span files, layers, and systems.

But modern codebases are sprawling: hundreds of files, multiple services, dense abstractions. Based on own experience and deep conversations with our customers across the Fortune 500, even top engineers spend much of their deep-work time finding and remembering what matters.

It’s a huge tax on productivity:

- New engineers take 3–9 months to fully ramp (estimates)

- Senior engineers lose 5+ hours per week onboarding others (source)

- Stripe found legacy maintenance to be the #1 drag on productivity on their customers (source)

This is the frontier that AI coding tools haven’t yet solved. Onboarding isn’t even a onetime cost, you pay it every time you switch context and codebases. The faster and better you understand your codebase, the faster and better you’ll be able to fix it yourself, or prompt agents to do it.

Until today, the standard approach by Copilot, Claude Code, Codex, and even Windsurf Cascade, was to have you ask questions of a generalist agent with access to your code in a typical chat experience. But those solutions don’t solve focused onboarding and strongly grounded navigation to onboard, debug, and better context engineer for your codebase.

At Cognition, we’ve been investing far more deeply in understanding:

- Ask Devin introduced focused agents that reason through real codebases.

- DeepWiki made that reasoning transparent, turning your repos into browsable, linked documentation.

- Windsurf brought these capabilities into the IDE.

Codemaps is our next investment in tooling that makes engineers the best versions of themselves.

Our solution: Just-in-Time mapping for any problem

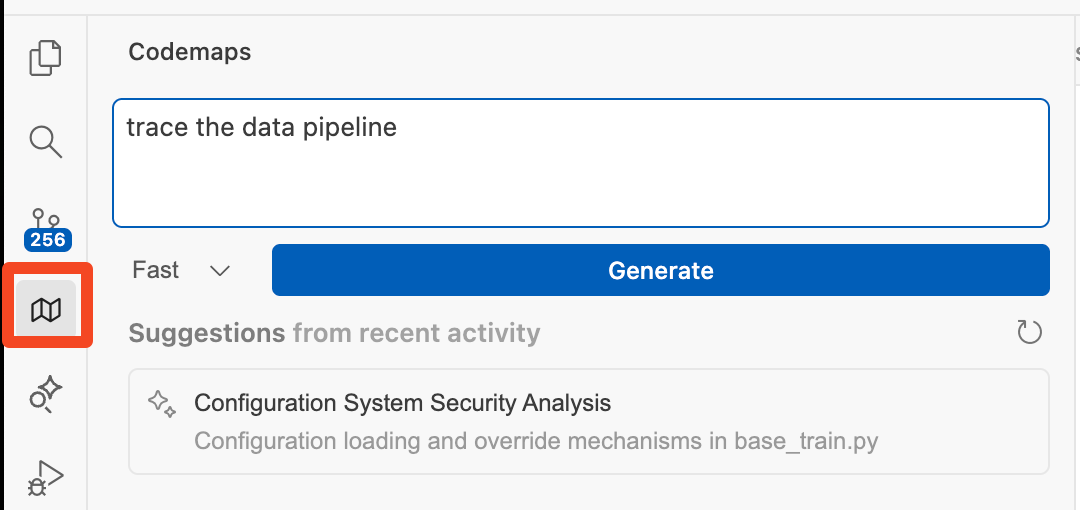

When you first open Codemaps (click the new maps icon or Cmd+Shift+C in Windsurf) with a codebase opened in Windsurf, you can enter in a prompt for the task you are trying to do, or take one of the automatic suggestions. You can choose a Fast (SWE-1.5) or Smart (Sonnet 4.5) model to generate your Codemap. Every Codemap is snapshots your code and respects ZDR.

Based on our demos to customers, you will experience Codemaps best on your own codebase and asking a question about how or where some functionality works. In our dogfooding, we find particular effectiveness tracing through client-server problems or a data pipeline or debugging auth/security issues:

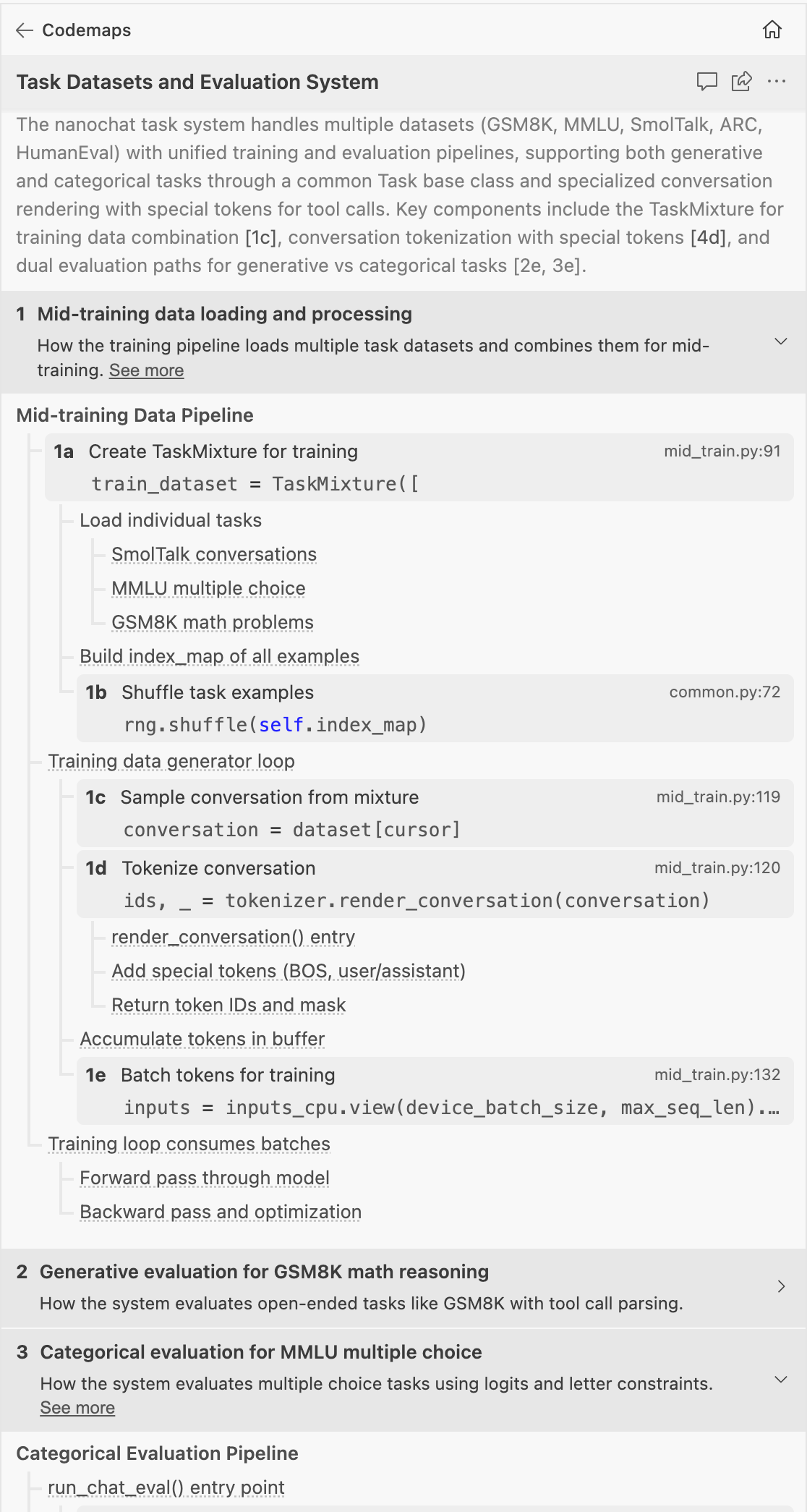

If all you wanted was to quickly jump through grouped and nested parts of your code that related to your question, this is already an improvement compared to asking the same question in Cascade, where answers are not as densely linked to the exact lines of code.

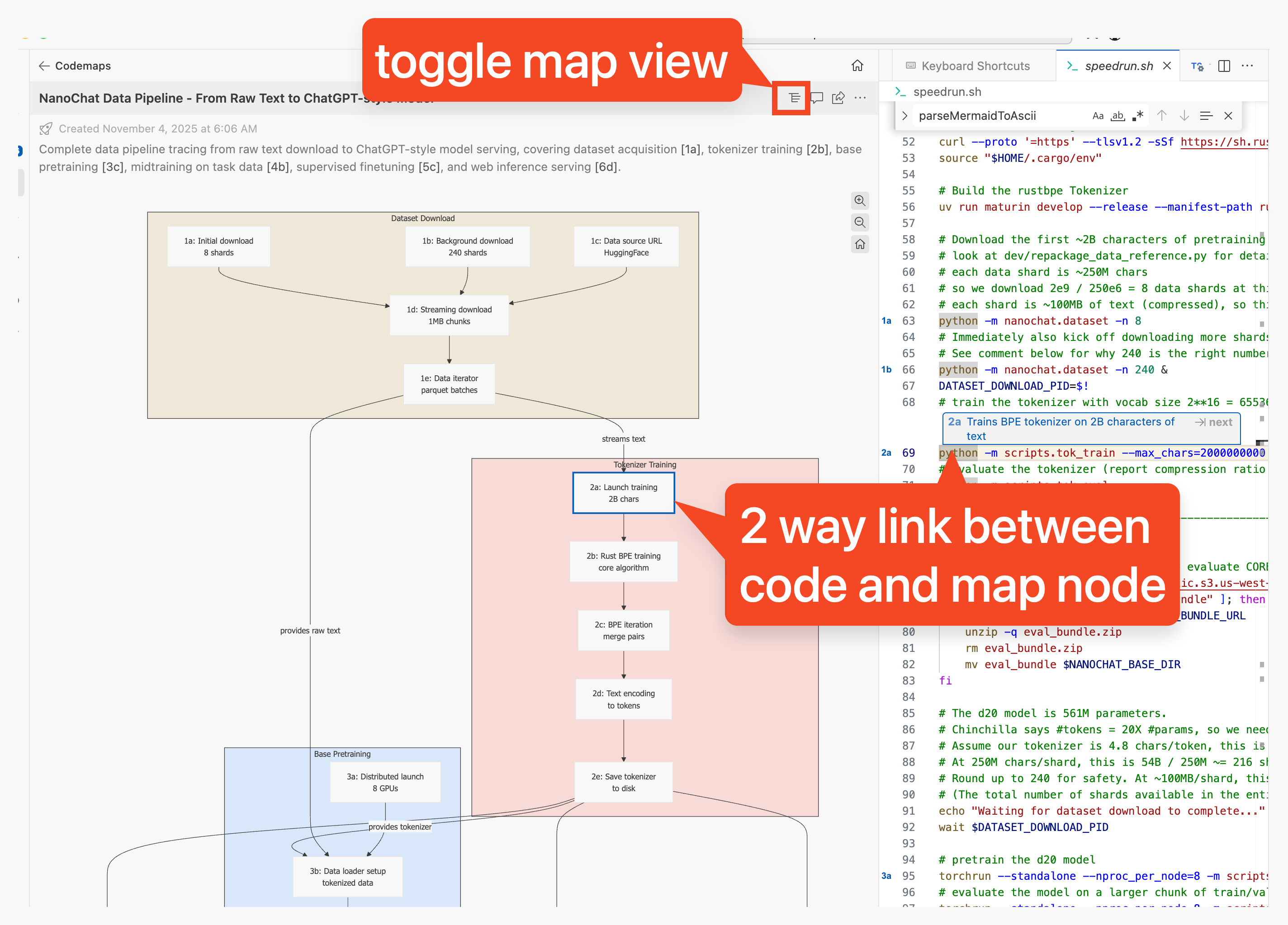

You can also toggle over to a visually drawn Codemap, which performs the same functions when you click on individual nodes: they send you to the exact part of the codebase you clicked on.

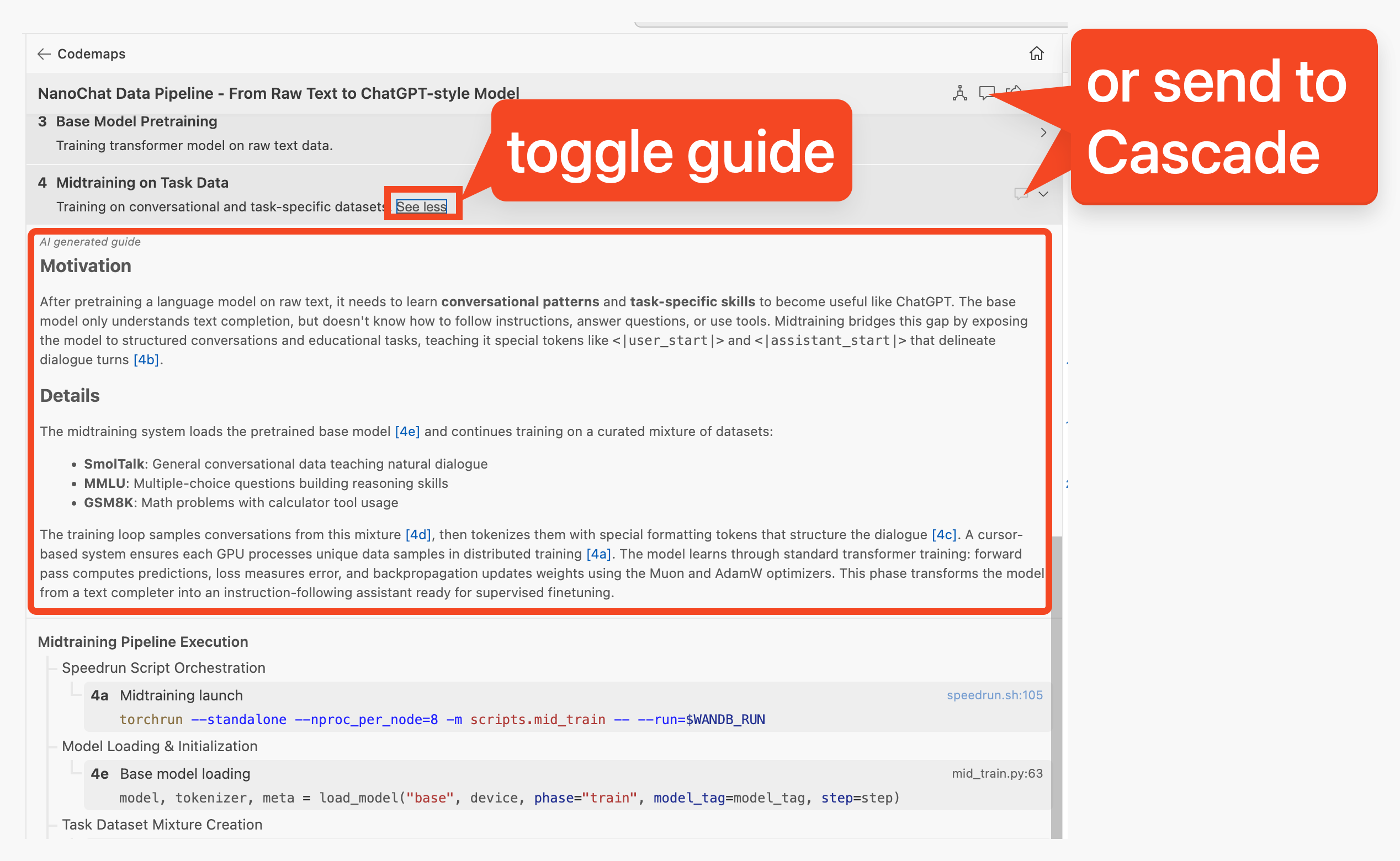

However, if you want a little more context, then you can hit “See more” in any section to expand our “trace guide” that gives a more descriptive explanation of what groups the discovered lines together.

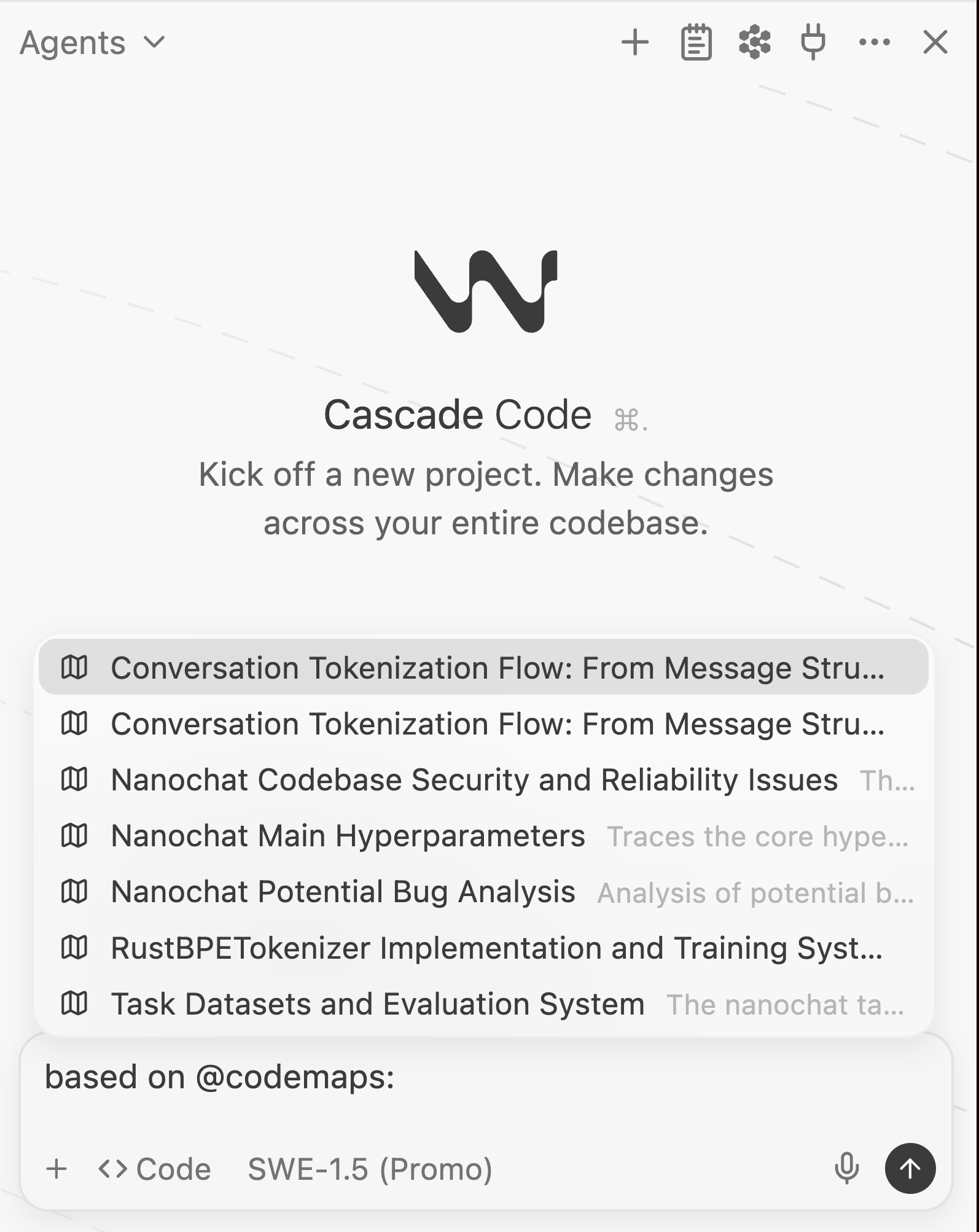

Finally, inside Cascade you can also reference a codemap for the agent with @{codemap} (all of it, or a particular subsection) in your prompt to provide more specific context and dramatically improve the performance of your agent for your task.

Fight back against Vibeslop

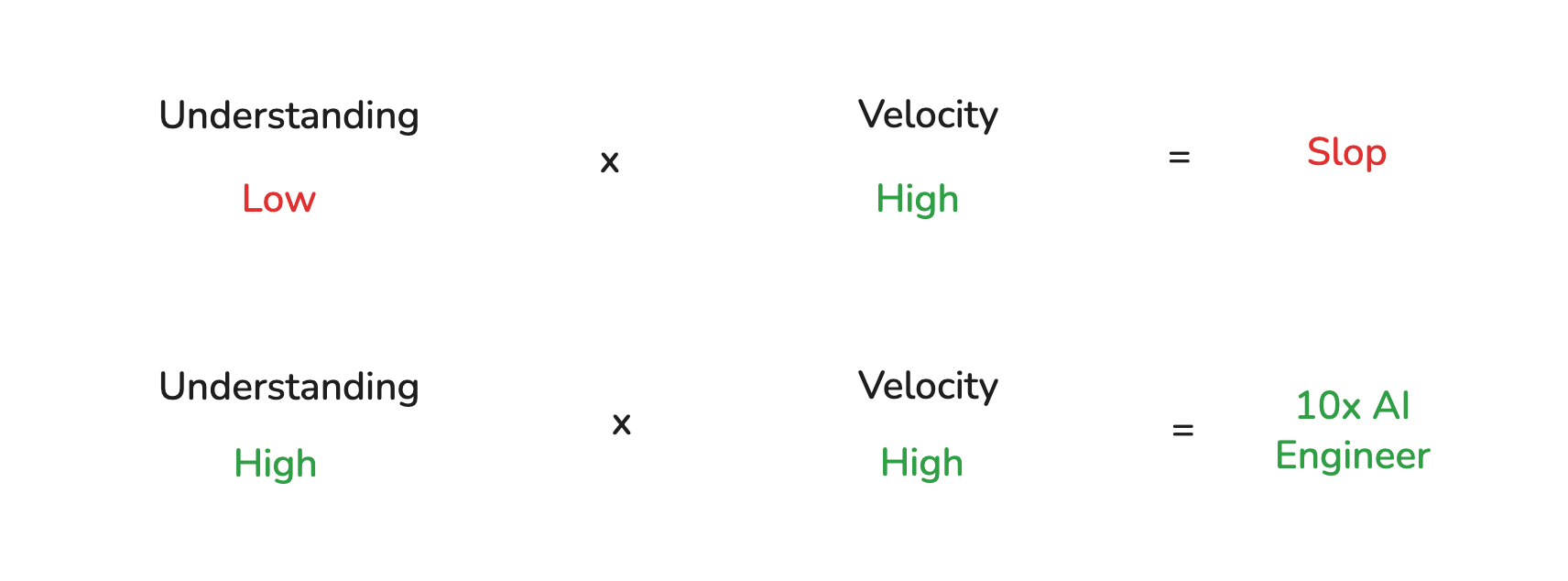

We feel that the popular usage of “vibe coding” has strayed far from the original intent, into a blanket endorsement of plowing through any and all AI generated code slop. If you look at the difference between the most productive vs the problematic AI-assisted coders, the productive ones can surf the vibes of code that they understand well, whereas people get into trouble when the code they generate and maintain starts to outstrip their ability to understand it.

To understand is to be accountable. As AI takes on more of the easy work, the hard problems left to humans are the ones that demand real comprehension: debugging complex systems, refactoring legacy code, making architecture decisions. In this new era, the engineer’s role shifts from authoring to accountability — you might not write every line, but you’re still responsible for what ships. That accountability depends on understanding what the AI produced, why it changed, and whether it’s safe. Codemaps closes that gap by giving both the human and the AI a shared picture of the system: how it’s structured, how data flows, where dependencies live. Codemaps is our latest Fast Agent, but as we discussed in the Semi-Async Valley of Death, our goal isn't just about speed, it is to help your human engineers stay in flow, stay on top of their code, and to move faster and more confidently on the hardest problems, never shipping slop that they don't understand.

Augment engineers for high value work, relieve them of low value work. The other local minima that the coding agent industry has gotten stuck in is in the general messaging of replacing engineers for low value work and not having any solutions for the hardest tasks apart from “pls ultrathink high, no mistakes”, which only gives autonomy to the agent, at the expense of the engineer. The long history of human-machine collaboration teaches us that we can always do more with the synergy rather than humans-alone or AI-alone. Our view is that the AI product that engineers will love most is the one that makes them better at their job, not the one that tries to replace them with a sloppy facsimile of themselves.

What’s Next

With Codemaps, we are now exposing to humans some of the indexing and analysis we do inside of our coding agents. These artifacts are sharable today across teams for learning and discussion, but we have yet to benchmark how much better they can make our coding agents like Devin and Cascade in solving challenging tasks on their own. We also see opportunities for connecting and annotating codemaps, as well as defining an open .codemap protocol that can be used by other code agents and custom tooling built by you. Complementing our Fast Context feature, this is an advancement in human-readable automatic context engineering.

You can try Codemaps on the latest versions of Windsurf, or DeepWiki!