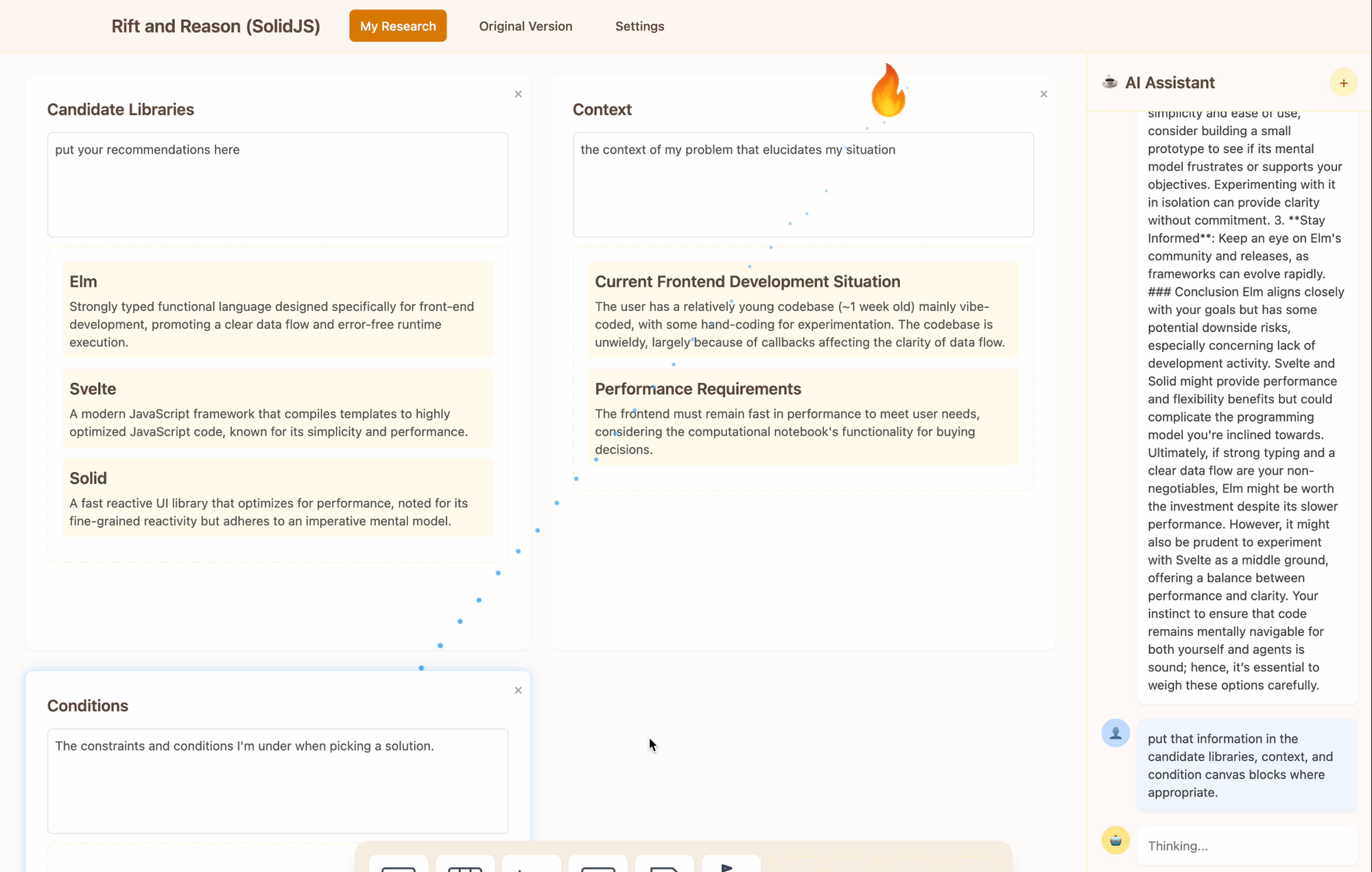

I ended up deciding on SolidJS. For one, I spent way too much time deliberating on a choice that won't matter that much at the end of the day. But I think I wanted to give functional programming a good shake, since it's aligned with my values and ideals. In the end, though, I couldn't choose Elm, because of the friction around ports and because it forces you to draw a boundary wherever you have side effects. It's not conducive to a computational flow that is interspersed with side effects but is still a single complete idea.

I eliminated two main risk factors last week:

- The stack for implementing free-floating avatars.

- Multi-tool use in a single cycle

I didn't want to have the AI feel like it's constrained to a sidebar, so I wanted to experiment with whether it can have an avatar that exists in the same digital space of the artifact as you, the user. This is still a left-over sentiment from my experiment with tool-based manipulation. While there is an advantage to having a chat history, I don't think it should be the default. I want the default to be direct-manipulation, not just for the user, but for the AI.

Between absolutely positioned DOM elements and a canvas for the avatar, I opted for the latter, mostly for flexibility of effects. I wasn't sure how canvas and DOM could communicate, but the browser provides query APIs that let me build functions like "move to this DOM" or "shoot at this DOM". LLM vibe coding helped a lot in surfacing these APIs, which I wasn't aware of before.
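To make the canvas-to-DOM handoff concrete, here is a minimal sketch of the kind of helper I mean. It assumes the query API in question is `getBoundingClientRect()`, which returns an element's position in viewport coordinates. The names `RectLike`, `Point`, and `centerInCanvas` are made up for this example and are not the actual code in my project.

```typescript
// Minimal rectangle shape. A DOMRect returned by getBoundingClientRect()
// has these fields, so it can be passed in directly in the browser.
interface RectLike {
  left: number;
  top: number;
  width: number;
  height: number;
}

interface Point {
  x: number;
  y: number;
}

// Returns the center of a target element in the canvas's own pixel space.
// canvasPixelWidth/Height are the backing-store size (canvas.width and
// canvas.height), which can differ from the CSS size on high-DPI screens.
function centerInCanvas(
  canvasRect: RectLike,
  targetRect: RectLike,
  canvasPixelWidth: number,
  canvasPixelHeight: number,
): Point {
  const scaleX = canvasPixelWidth / canvasRect.width;
  const scaleY = canvasPixelHeight / canvasRect.height;
  // Center of the target in viewport coordinates.
  const cx = targetRect.left + targetRect.width / 2;
  const cy = targetRect.top + targetRect.height / 2;
  // Shift into the canvas's frame, then scale from CSS pixels to canvas pixels.
  return {
    x: (cx - canvasRect.left) * scaleX,
    y: (cy - canvasRect.top) * scaleY,
  };
}

// Browser usage (hypothetical):
//   const target = centerInCanvas(
//     canvas.getBoundingClientRect(),
//     blockElement.getBoundingClientRect(),
//     canvas.width,
//     canvas.height,
//   );
//   // then animate the avatar, or a particle, toward target.x / target.y
```

Both rectangles are in viewport coordinates, so subtracting the canvas's origin is enough as long as the canvas itself isn't rotated or skewed with CSS transforms.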

The avatar for the AI is the flame, and when it manipulates a canvas block it shoots a particle at it. The graphics aren't final, but I wanted to see how it felt to have something on the screen manipulate things. Generally, I think it feels good, but it's a balance between visual feedback and distraction. It still needs a lot of polish.

One of the issues was that my agent tended to stop after a single tool use. Sometimes after two. Sometimes it repeated the same tool use over and over again. I didn't know whether this was an inherent limitation or an implementation issue. Surprise! It's an implementation issue.

First, I wasn't inserting the results of the tool call into the message history, so the LLM thought the tool use had been a no-op and tried again. Easy fix.
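Roughly, the fixed loop has the shape below. This is a simplified sketch, not my actual agent code: the message shape is generic rather than any specific provider's API, the model is a plain synchronous function so the example stays self-contained (a real call would be async), and `Message`, `Model`, `Tools`, and `runCycle` are all names invented for illustration.

```typescript
interface ToolCall {
  id: string;
  name: string;
  args: Record<string, unknown>;
}

interface AssistantMessage {
  role: "assistant";
  content: string;
  toolCall?: ToolCall;
}

type Message =
  | { role: "user"; content: string }
  | AssistantMessage
  | { role: "tool"; toolCallId: string; content: string };

// Stand-in for the LLM call: given the history so far, return the next reply.
type Model = (history: Message[]) => AssistantMessage;
type Tools = Record<string, (args: Record<string, unknown>) => string>;

// Runs one cycle: keep calling the model until it stops asking for tools,
// or until maxSteps is reached (a guard against endless repetition).
function runCycle(
  model: Model,
  tools: Tools,
  history: Message[],
  maxSteps = 8,
): Message[] {
  for (let step = 0; step < maxSteps; step++) {
    const reply = model(history);
    history.push(reply);

    const call = reply.toolCall;
    if (!call) break; // no tool requested, so the cycle is done

    const tool = tools[call.name];
    const result = tool ? tool(call.args) : `unknown tool: ${call.name}`;

    // The step I was missing: record the result in the history so the
    // model can see that the call had an effect and move on.
    history.push({ role: "tool", toolCallId: call.id, content: result });
  }
  return history;
}
```

Without the final `history.push`, each turn looks to the model exactly like the previous one, which matches the repeat-the-same-tool behavior I was seeing.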

Second, my prompt wasn't good enough. I fired up o3 and described what I was trying to do, along with my current prompt. It improved the prompt by making it explicit in ways I wouldn't have thought of. Getting an LLM to generate prompts for itself (or for simpler models) has been a big unlock for me when using these things.

I have redone the kinds of canvas blocks that are available, and that ended up being an interesting thing in itself. I'll have to finish it this week.

Here's what I looked at this week:

- P1: I don’t use RAG, I just retrieve documents – Hamel's Blog #[[Retrieval Assisted Generation]]

- Memory Safety is Merely Table Stakes | USENIX #[[Foreign Function Interface]]

- The Prompt Engineering Playbook for Programmers #[[Prompt Engineering]]

- MCP is eating the world—and it's here to stay #[[Model Context Protocol]]

- Running a million-board chess MMO in a single process · eieio.games #[[Postmortem Engineering]]

- Custom Elements in Elm – Dropbox Paper #[[Elm]]