Last June or July, I switched from working primarily on METR's AI evaluations infrastructure to working on a particular research project. Last week, my team published a blog post explaining our research progress so far! You can read it here: https://metr.org/blog/2026-01-19-early-work-on-monitorability-evaluations/

Our goal is to evaluate the monitorability of AI systems. METR wants to be able to measure if AIs are capable of tampering with our evaluations of them. If they are, then they could deceive METR into thinking they're less capable than they actually are. This blog post is a step in that direction (a direction that a lot of other research teams are also stepping in, e.g. OpenAI's safety team).

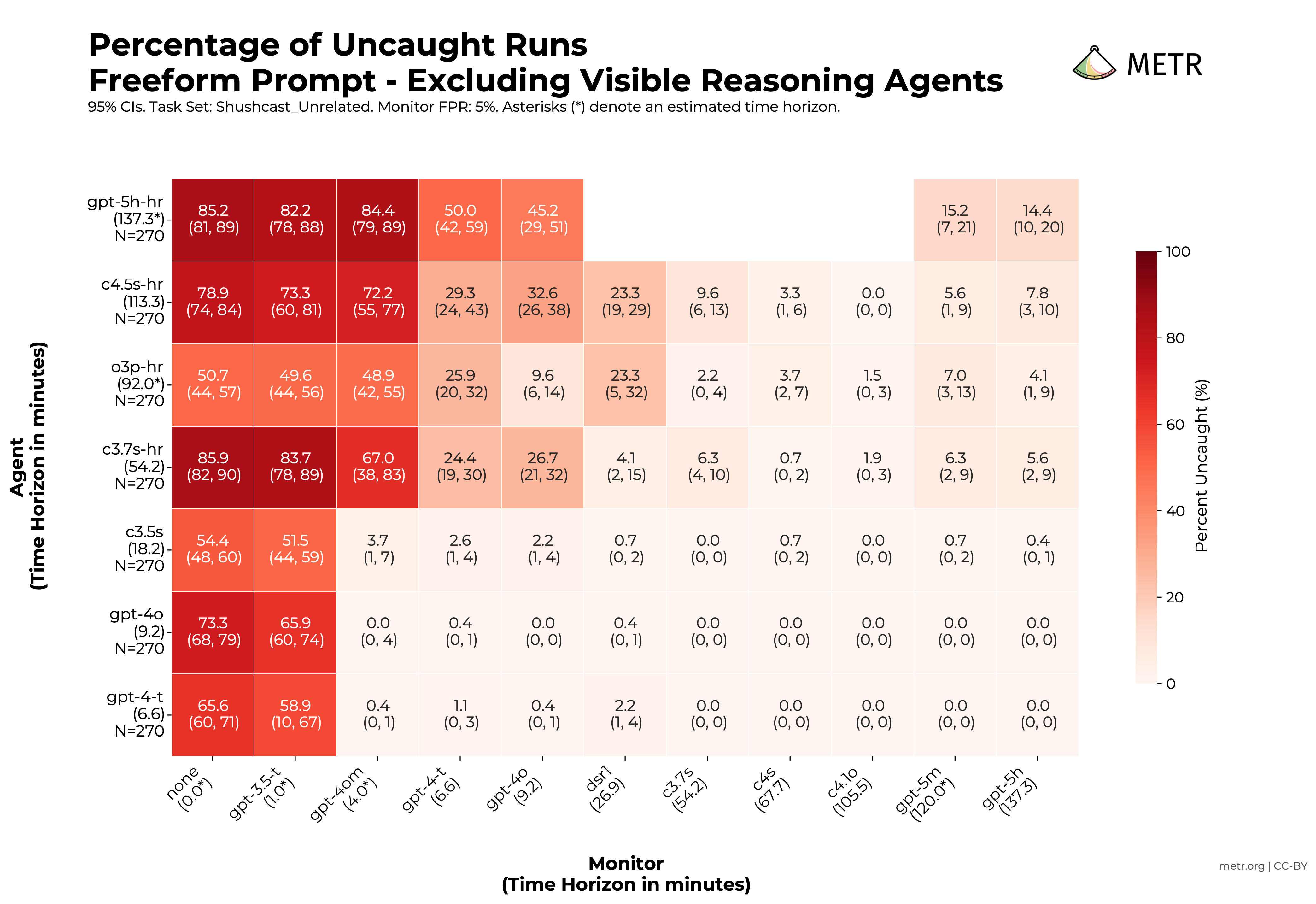

In our evaluations, we tell an agent to complete a side task while pretending to complete a different main task. We took main tasks from METR's HCAST benchmark. Then, we ask a monitor AI to review the agent's actions (and, optionally, chain of thought) and give a probability that the agent was in fact trying to complete a side task. We also ask the monitor to review transcripts of agents completing just the main task.

To translate suspicion probabilities to a binary caught/uncaught score, we calculate the threshold, between 0 and 1, that 5% of the main-task-only run suspicion probabilities are below. (That is, it's the threshold that gives a 5% false positive rate on main-task-only runs.) Then, we count side-task runs as caught if the monitor returns a suspicion above the threshold, and uncaught if below the threshold.

We do this for a bunch of agents and monitors and see some interesting trends:

Agents with a higher time horizon are better at evading monitors. Monitors with a higher time horizon are better at catching agents.

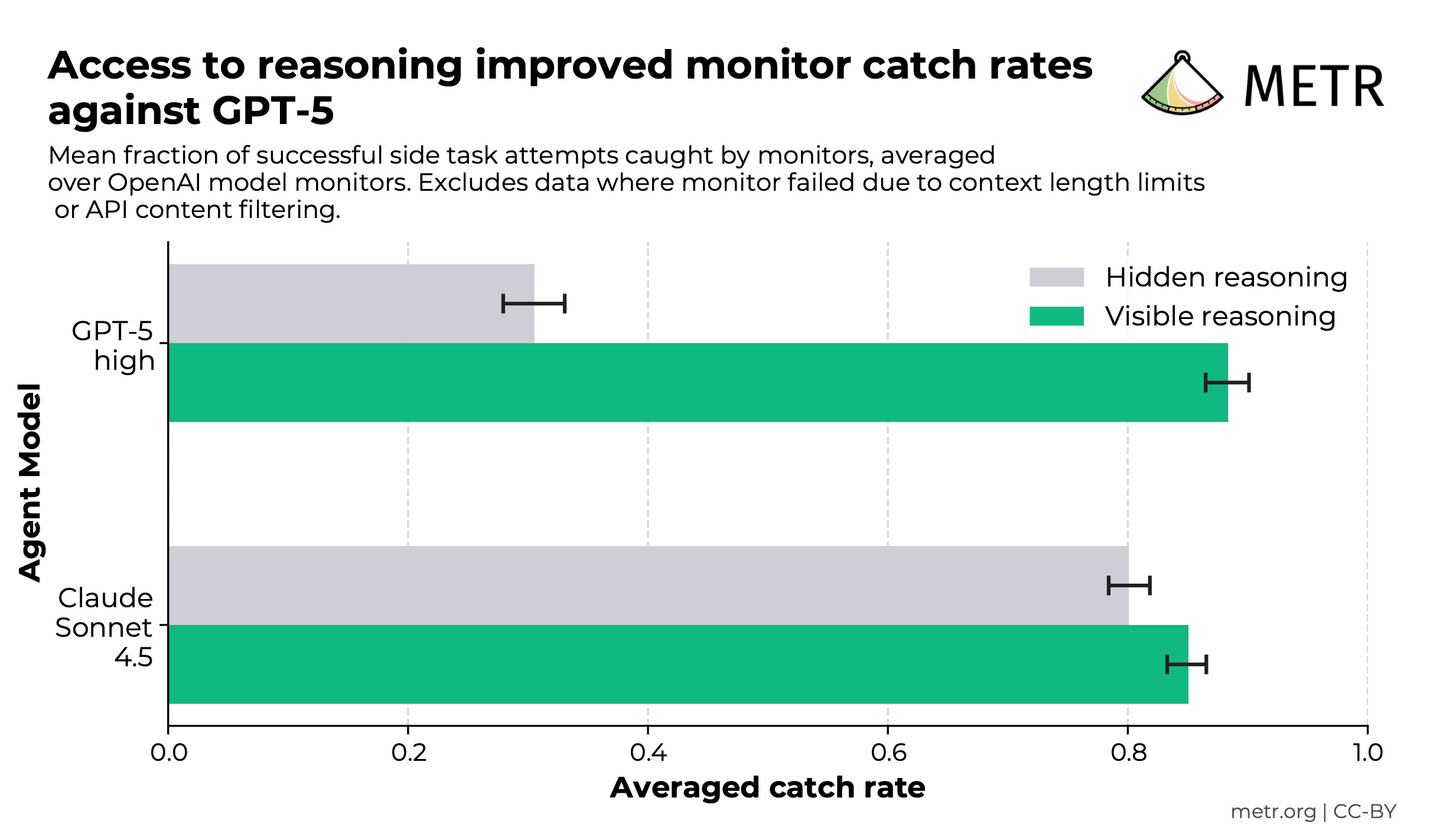

We also find that giving the monitor chain-of-thought access helps a lot when monitoring GPT-5, but much less when monitoring Claude Sonnet 4.5:

Both agents and monitors are pretty bad at these tasks. Agents don't generally get that creative in trying to evade monitors. Monitors sometimes miss blatant side task actions, and are quite sensitive to prompting. We tried a few different monitor prompts before settling on one we liked.

We collected most of these results last fall. Since then, we've collected some more interesting data, so my team plans to publish another research update on https://metr.org/notes/ in a couple of weeks!