GitClear published the report “AI Copilot Code Quality,” and I discovered it via The PrimeTime YouTube channel.

The paper focuses on software maintainability, a less discussed topic than the one that dominates discourse on the internet: boosting developer productivity.

Abstract

The data in this report contains multiple signs of eroding code quality. This is not to say that AI isn’t incredibly useful. But it is to say that the frequency of copy/pasted lines in commits grew 6% faster than our 2024 prediction. Meanwhile, the percent of commits with duplicated blocks grew even faster. Our research suggests a path by which developers can continue to generate distinct value from code assistants into the foreseeable future.

Key Points:

The sharp upward curve of AI adoption seemingly guaranteed that, if the identified trends were really correlated with AI use, they would get worse in 2024. That led us to predict, in January 2024, that the annual Google DORA Research (eventually released in October 2024) would show “Defect rate” on the rise. Fortunately for our prediction record, unfortunately for Dev Team Managers, the Google data bore out the notion that a rising defect rate correlates with AI adoption.

- The rise of AI code assistants correlates with an increase in bugs.

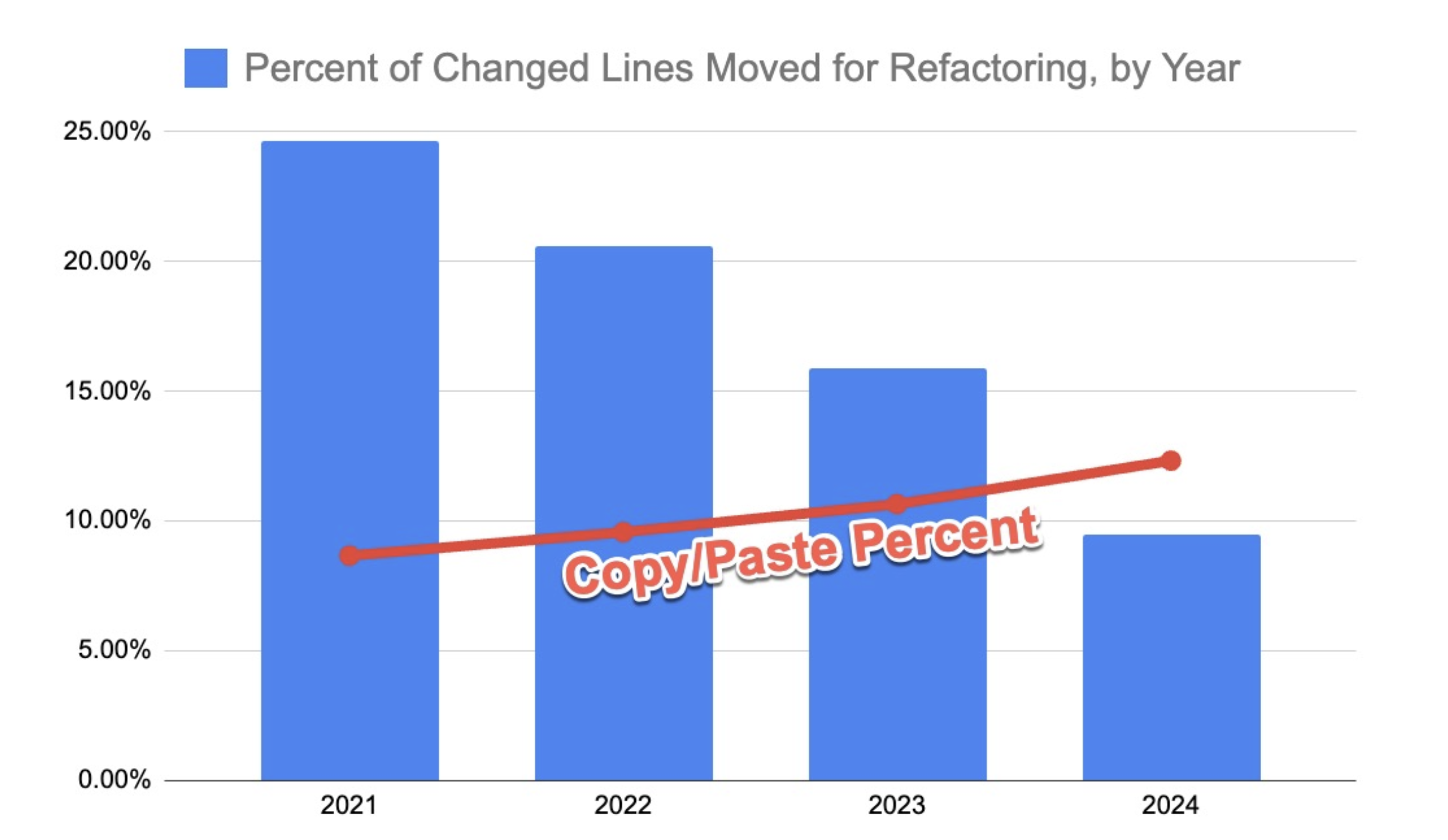

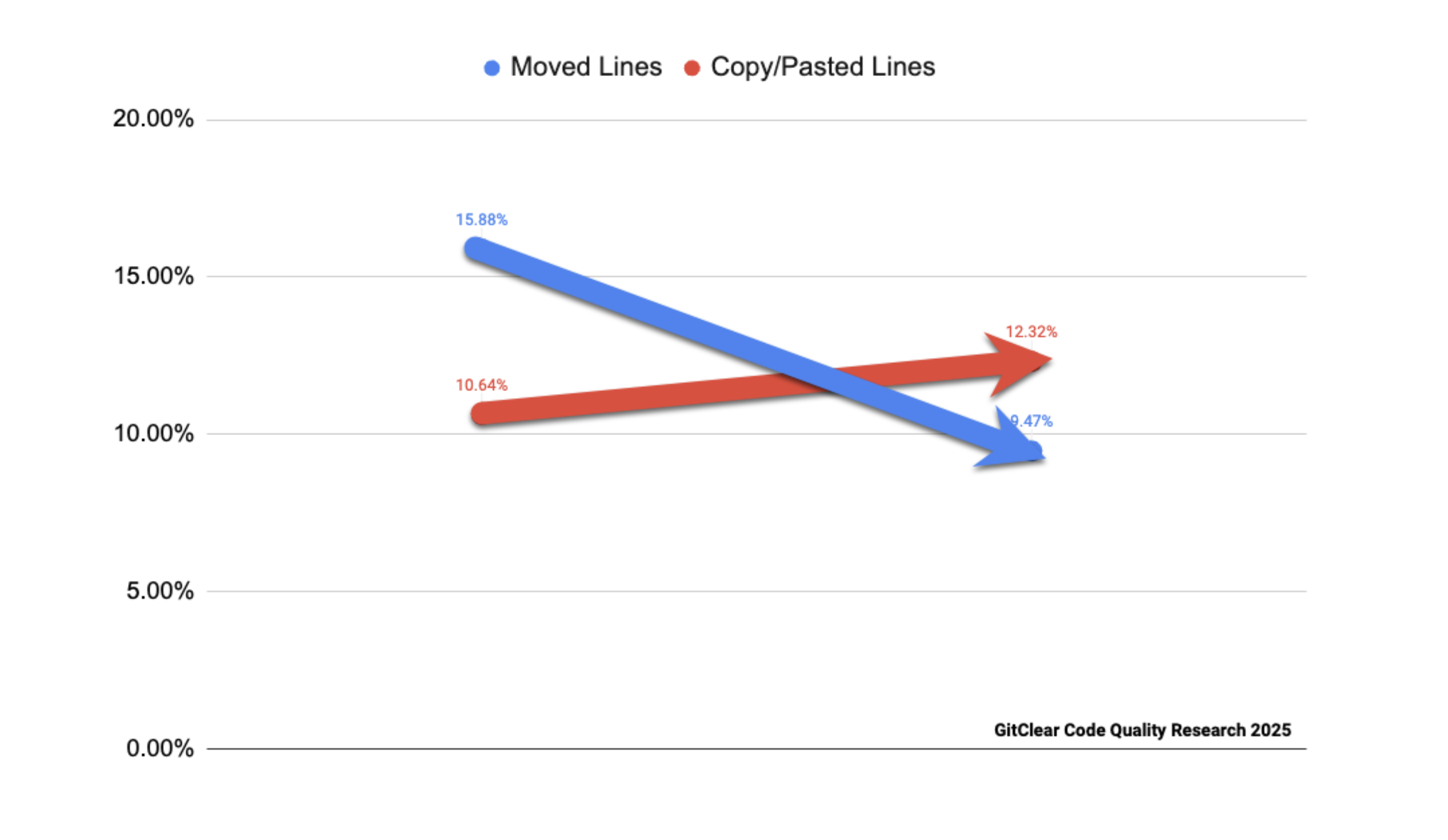

2024 marked the first year GitClear has ever measured where the number of “Copy/Pasted” lines exceeded the count of “Moved” lines. Moved lines strongly suggest refactoring activity. If the current trend continues, we believe it could soon bring about a phase change in how developer energy is spent, especially among long-lived repos. Instead of developer energy being spent principally on developing new features, in coming years we may find “defect remediation” as the leading day-to-day developer responsibility.
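To make the “Moved” versus “Copy/Pasted” distinction concrete, here is a minimal Python sketch. It assumes simplified, hypothetical definitions rather than GitClear's actual classifier. An added line counts as moved if an identical line was removed elsewhere in the same commit. It counts as copy/pasted if it already exists in the codebase or was already added earlier in the commit. The function name `classify_added_lines` and its inputs are illustrative only.

```python
from collections import Counter
from typing import Iterable


def classify_added_lines(
    removed: Iterable[str], added: Iterable[str], existing: Iterable[str]
) -> Counter:
    """Label each added line of one commit as "moved", "copy_pasted", or "new".

    Hypothetical, simplified rules (not GitClear's actual algorithm):
    - moved: an identical line was removed elsewhere in the same commit
    - copy_pasted: the line already exists in the codebase, or was already
      added earlier in this commit
    - new: everything else
    Lines are compared after stripping whitespace; blank lines are skipped.
    """
    # Multiset of removed lines, so each removal can account for only one move.
    removed_pool = Counter(line.strip() for line in removed if line.strip())
    existing_set = {line.strip() for line in existing if line.strip()}
    seen_in_commit = set()
    counts = Counter()
    for line in added:
        key = line.strip()
        if not key:
            continue  # ignore blank lines
        if removed_pool[key] > 0:
            removed_pool[key] -= 1
            counts["moved"] += 1
        elif key in existing_set or key in seen_in_commit:
            counts["copy_pasted"] += 1
        else:
            counts["new"] += 1
        seen_in_commit.add(key)
    return counts


# Usage: a helper is moved between files while one statement is duplicated.
counts = classify_added_lines(
    removed=["def helper():", "    return 1"],
    added=["def helper():", "    return 1", "x = compute()", "x = compute()"],
    existing=["import os"],
)
print(dict(counts))  # {'moved': 2, 'new': 1, 'copy_pasted': 1}
```

Tallying these counts across many commits would yield the kind of moved-to-copy/pasted comparison the report tracks. A real tool would also need to handle renamed files, whitespace-only edits, and trivial lines such as closing braces, which this sketch would count as copy/pasted.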

This suggests that developers prioritize shipping code, demonstrating impact, contributing to FOSS, and experiencing a sense of productivity, while focusing less on refactoring and on creating general, reusable code. I would like to know how maintainers feel about this trend in contributions and about the quality of the pull requests they receive. If AI can generate code quickly, there should also be efforts to develop tools that enhance code quality.

Even when managers focus on more substantive productivity metrics, like “tickets solved” or “commits without a security vulnerability,” AI can juice these metrics by duplicating large swaths of code in each commit. Unless managers insist on finding metrics that approximate “long-term maintenance cost,” the AI-generated work their team produces will take the path of least resistance: expand the number of lines requiring indefinite maintenance.

This perspective resonates: at higher levels within an organization, key metrics often revolve around increasing profits, accelerating feature deployment, and minimizing incidents and bugs, while code quality comes up far less often.

The combination of these trends leaves little room to doubt that the current implementation of AI Assistants makes us more productive at the expense of repeating ourselves (or our teammates), often without knowing it. Instead of refactoring and working to DRY (“Don’t Repeat Yourself”) code, we’re constantly tempted to duplicate.

The process has become easier, as assistants and agents can now generate code, edit files, and write test cases. I experimented with Cline, a VS Code extension, and found that a well-structured, detailed prompt can produce code remarkably quickly.

An interesting observation is that most AI benchmarks focus on solving LeetCode problems and GitHub issues, yet I know of no widely used benchmark that assesses code quality and maintainability.

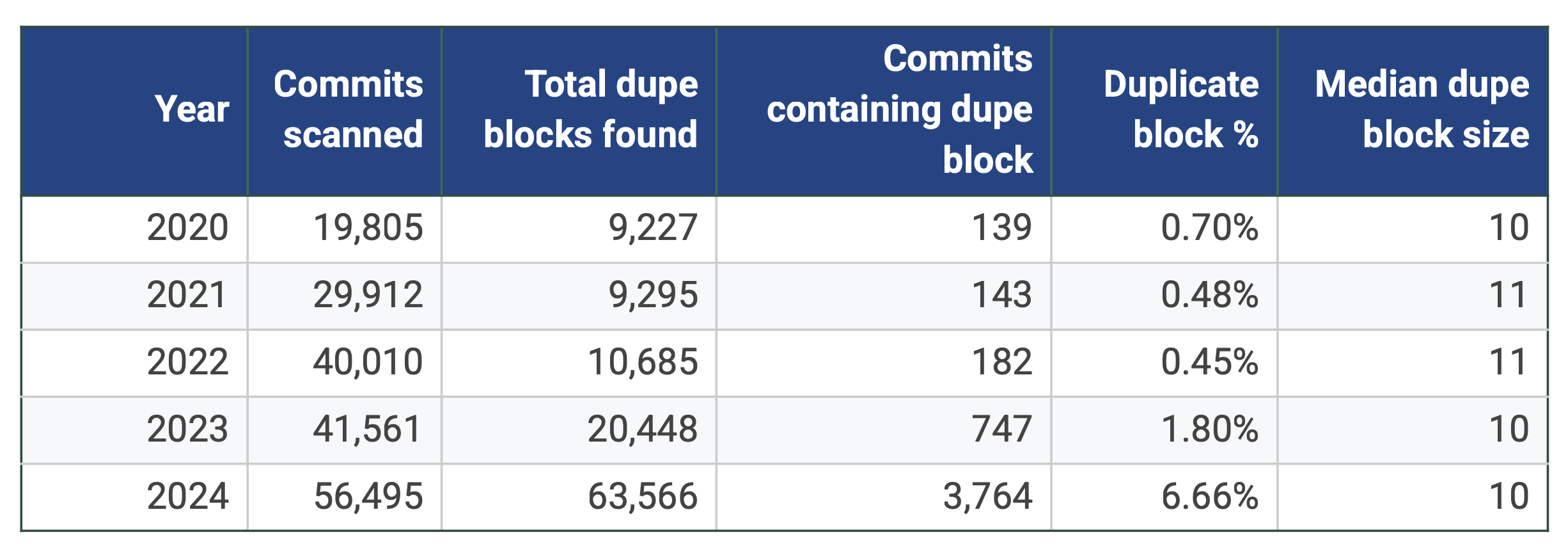

According to our duplicate block detection method [A8], 2024 was without precedent in the likelihood that a commit would contain a duplicated code block. The prevalence of duplicate blocks in 2024 was observed to be approximately 10x higher than it had been two years prior.
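The report does not describe its duplicate block detection method [A8] here, so the following is only a hypothetical Python sketch of one common approach. It slides a fixed-size window over a commit's added lines, normalizes whitespace, and flags any window of lines that appears more than once. The 5-line `block_size` default and the function names are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def find_duplicate_blocks(lines: Sequence[str], block_size: int = 5) -> List[List[int]]:
    """Return groups of start positions where an identical block of lines repeats.

    Hypothetical sketch (not GitClear's [A8] method): a block is `block_size`
    consecutive non-blank lines, compared after stripping whitespace. Positions
    index into the list of non-blank lines, not the raw input.
    """
    normalized = [line.strip() for line in lines if line.strip()]
    positions: Dict[Tuple[str, ...], List[int]] = defaultdict(list)
    for start in range(len(normalized) - block_size + 1):
        window = tuple(normalized[start:start + block_size])
        positions[window].append(start)
    # Keep only windows that occur more than once.
    return [starts for starts in positions.values() if len(starts) > 1]


def commit_has_duplicate_block(added_lines: Sequence[str], block_size: int = 5) -> bool:
    """True if the commit's added lines contain at least one repeated block."""
    return bool(find_duplicate_blocks(added_lines, block_size))


block = ["a = load()", "b = clean(a)", "c = score(b)", "log(c)", "save(c)"]
print(commit_has_duplicate_block(block + ["other()"] + block))  # True
print(commit_has_duplicate_block(block))  # False
```

Using tuples of lines as dictionary keys keeps the sketch short. A production detector would more likely hash the windows (for example, with a rolling hash) and compare blocks across the whole repository rather than within a single commit.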

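AI-generated suggestions are free and quick to obtain, so accepting a duplicated block takes far less effort up front than searching for existing code to reuse.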

Google DORA’s 2024 survey included 39,000 respondents, enough sample size to evaluate how the reported AI benefit of “increased developer productivity” mixed with the AI liability of “lowered code quality.” That research has since been released, with Google researchers commenting: “AI adoption brings some detrimental effects. We have observed reductions to software delivery performance, and the effect on product performance is uncertain.”

But the 2024 ratios for “what type of code is being revised” do not paint an encouraging picture. During the past year, only 20% of all modified lines were changing code that was authored more than a month earlier. Whereas, in 2020, 30% of modified lines were in service of refactoring existing code.
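The “authored more than a month earlier” ratio reduces to a small calculation. The sketch below assumes each modified line comes with two hypothetical timestamps: when the previous version of the line was authored (for example, as reported by `git blame` on the parent commit) and when it was modified. It treats “a month” as 30 days, which is also an assumption, and returns the fraction of modified lines whose previous version was older than that.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def share_revising_old_code(
    modifications: List[Tuple[datetime, datetime]],
    older_than: timedelta = timedelta(days=30),
) -> float:
    """Fraction of modified lines whose previous version was authored more than
    `older_than` before the modification.

    Each item is a hypothetical (previously_authored_at, modified_at) pair.
    """
    if not modifications:
        return 0.0
    old = sum(1 for authored, modified in modifications if modified - authored > older_than)
    return old / len(modifications)


now = datetime(2024, 6, 1)
mods = [
    (now - timedelta(days=90), now),   # revises code older than a month
    (now - timedelta(days=3), now),    # revises recent code
    (now - timedelta(days=10), now),   # revises recent code
    (now - timedelta(days=400), now),  # revises code older than a month
]
print(share_revising_old_code(mods))  # 0.5
```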

This trend suggests that new pull requests are often created to fix issues introduced by previous ones.

The trend line here is a little cagey, with 2023 faking a return toward pre-AI levels. But if we consider 2021 as the “pre-AI” baseline, this data tells us that, during 2024, there was a 20-25% increase in the percent of new lines that get revised within a month.
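The churn figure can be sketched in the same spirit. It is the share of new lines revised within 30 days of being added, plus a helper for the year-over-year comparison. Both functions and the (added_at, first_revised_at) input format are hypothetical. The sketch also assumes the report's “20-25% increase” is a relative change in the churn percentage rather than an increase in percentage points, which the quoted sentence does not state explicitly.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


def churn_rate(
    new_lines: List[Tuple[datetime, Optional[datetime]]],
    window: timedelta = timedelta(days=30),
) -> float:
    """Fraction of newly added lines revised within `window` of being added.

    Each item is a hypothetical (added_at, first_revised_at) pair, where
    first_revised_at is None if the line has not been revised.
    """
    if not new_lines:
        return 0.0
    churned = sum(
        1
        for added_at, revised_at in new_lines
        if revised_at is not None and revised_at - added_at <= window
    )
    return churned / len(new_lines)


def relative_increase(baseline: float, current: float) -> float:
    """Relative change between two rates; moving from 0.05 to 0.06 is a 20% increase."""
    return (current - baseline) / baseline


t = datetime(2024, 1, 1)
lines = [
    (t, t + timedelta(days=5)),   # revised within a month
    (t, None),                    # never revised
    (t, t + timedelta(days=45)),  # revised, but after the window
    (t, t + timedelta(days=30)),  # revised exactly at the window boundary
]
print(churn_rate(lines))  # 0.5
```

The distinction matters when reading the report: a move from 5% to 6% churn is only one percentage point, but it is a 20% relative increase.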

This raises an important question about finely crafted code: are new developers actively thinking about improving their craft? I have observed engineers with ambitions to write compilers, design new programming languages, or even rewrite the TCP stack in Rust.

The never-ending rollout of more powerful AI systems will continue to transform the developer ecosystem in 2025. In an environment where change will be constant, we would suggest that developers emphasize their still-uniquely human ability to “simplify” and “consolidate” code they understand. There is art, skill and experience that gets channeled into creating well-named, well-documented modules. Executives that want to maximize their throughput in the “Age of AI” will discover new ways to incentivize reuse. Devs proficient in this endeavor stand to reap the benefits.

That observation aligns with my previous point.

Conclusion

The report also doubles as a pitch for companies to adopt GitClear. Even so, I find it worthwhile to consider the long-term advantages and effects of AI coding assistants.

A couple of months ago, I was following Crafting Interpreters and writing Go code in VS Code. I had to disable Copilot so that I could fully understand what was happening in the codebase instead of accepting ten-line auto-completions.

My personal take is that most of these tools primarily offer suggestions, with little to no emphasis on leveraging existing code within the codebase to achieve coherence. In my view, this lack of contextual awareness is one of the factors behind the duplication trend the report describes. Tools may also help rank pull request quality, detect duplication, and assess other metrics. Still, unless they are integrated early in the development process, where they can reuse existing utility functions or suggest refactoring existing code, the problem will persist.

It is also worth considering whether to deploy LLMs fine-tuned on a specific codebase, with the goal of improving code quality through suggestions that prioritize reusability and maintainability. However, the cost of maintaining and updating such LLMs remains a significant challenge.

Another option is to use a reasoning model to decide whether a task calls for analyzing the codebase and suggesting changes to existing code, or for generating new code.