¹ Nvidia ² Cornell University ³ National Taiwan University

We optimize an adaptive sparse-voxel radiance field from multi-view images without SfM points. The fly-through videos are rendered by our SVRaster at over 100 FPS.

Overview

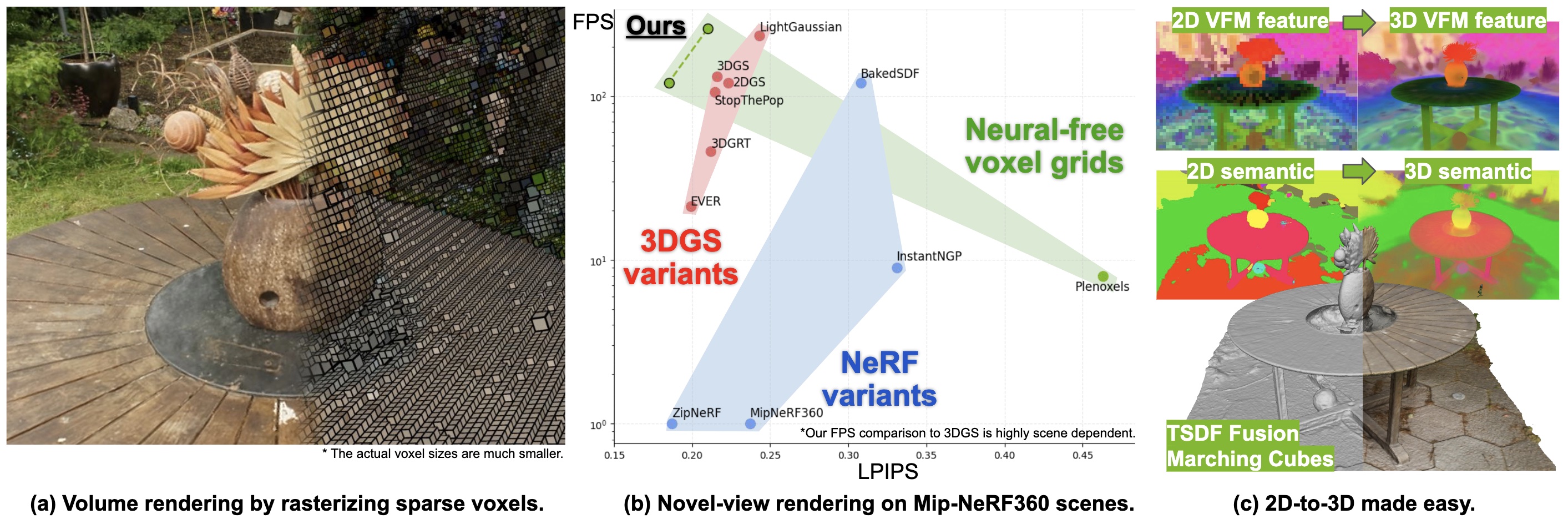

We propose an efficient radiance field rendering algorithm that incorporates a rasterization process on adaptive sparse voxels, without neural networks or 3D Gaussians. The proposed system has two key contributions. The first is to adaptively and explicitly allocate sparse voxels to different levels of detail within a scene, faithfully reproducing scene details at a 65536³ grid resolution while achieving high rendering frame rates. The second is a customized rasterizer for efficient rendering of adaptive sparse voxels. We render voxels in the correct depth order by using ray-direction-dependent Morton ordering, which avoids the well-known popping artifact found in Gaussian splatting. Our method improves on the previous neural-free voxel model by over 4 dB PSNR with a more than 10x FPS speedup, achieving novel-view synthesis results comparable to the state of the art. Additionally, our voxel representation is seamlessly compatible with grid-based 3D processing techniques such as Volume Fusion, Voxel Pooling, and Marching Cubes, enabling a wide range of future extensions and applications.
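To illustrate why a direction-dependent Morton order gives a valid front-to-back order, here is a minimal sketch, not the SVRaster rasterizer. It assumes all voxels are given as integer coordinates on a single uniform grid with `bits` bits per axis; `morton3d` and `direction_dependent_order` are hypothetical helper names. The idea: if a ray travels in the positive direction on every axis, a voxel A can only occlude a voxel B when A's coordinates are componentwise no larger than B's, and Morton (Z-order) codes preserve that partial order. For each axis where the ray travels in the negative direction, mirroring the coordinate before encoding restores the same property.

```python
def morton3d(x: int, y: int, z: int, bits: int) -> int:
    """Interleave the low `bits` bits of x, y, z into one Morton (Z-order) code.

    Bit i of x goes to bit 3i, bit i of y to bit 3i+1, bit i of z to bit 3i+2.
    """
    code = 0
    for i in range(bits):
        code |= ((x >> i) & 1) << (3 * i)
        code |= ((y >> i) & 1) << (3 * i + 1)
        code |= ((z >> i) & 1) << (3 * i + 2)
    return code


def direction_dependent_order(voxels, ray_dir, bits):
    """Sort integer voxel coordinates front-to-back for rays whose direction
    has the same sign pattern as `ray_dir`.

    Axes with a negative direction component are mirrored (c -> max_coord - c)
    before Morton encoding, so the Z-order traversal starts on the side of the
    grid the ray enters from.
    """
    max_coord = (1 << bits) - 1

    def key(v):
        c = [max_coord - v[a] if ray_dir[a] < 0 else v[a] for a in range(3)]
        return morton3d(c[0], c[1], c[2], bits)

    return sorted(voxels, key=key)


voxels = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 1)]
print(direction_dependent_order(voxels, (-1.0, 1.0, 1.0), bits=1))
# [(1, 0, 0), (0, 0, 0), (0, 1, 0), (1, 1, 1)]
```

Because the order depends only on the sign pattern of the ray direction, there are at most 8 distinct orderings, which is what makes a per-direction sort practical for a rasterizer.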

Adaptive Sparse Voxel Representation and Rendering

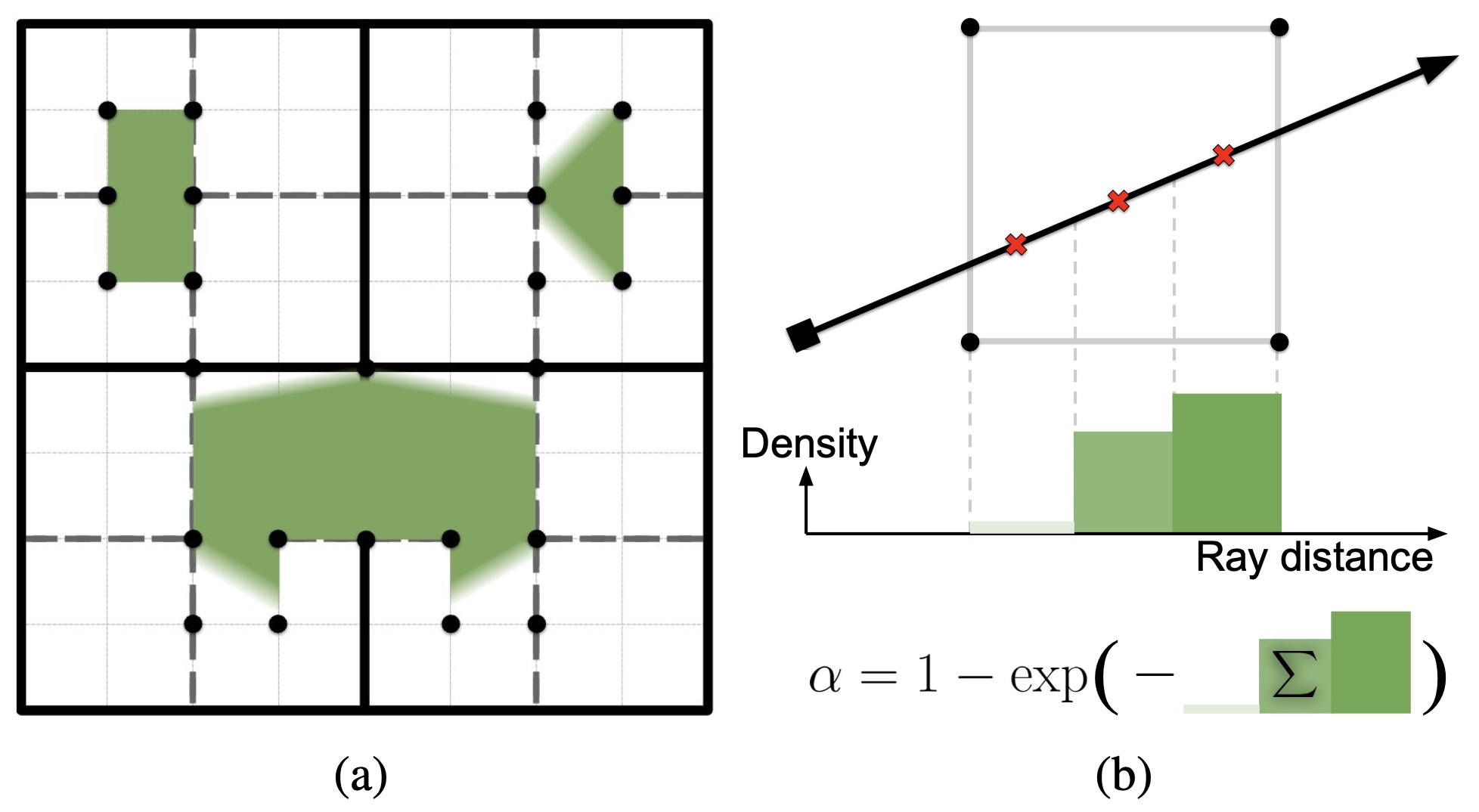

Our scene representation is a hybrid of a primitive model and a volumetric model. (a) Primitive component. We explicitly allocate voxel primitives to cover different levels of detail in the scene under an Octree layout. Note that we do not replicate a traditional Octree data structure with parent-child pointers, nor a linear Octree. We only keep the voxels at the Octree leaf nodes, without any ancestor nodes. (b) Volumetric component. Inside each voxel is a volumetric (trilinear) density field and a (constant) spherical harmonic field. We sample K points on the ray-voxel intersection segment and compute the voxel's intensity contribution to the pixel by numerical integration.
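The per-voxel computation described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: a leaf voxel is identified only by its Octree level and integer index inside a cubic scene bound (no ancestor nodes are stored), the ray direction is unit length, the 8 corner densities are used as-is (no activation function), the K samples are placed at segment midpoints, and the constant spherical-harmonic color is omitted so only the voxel's opacity is computed. All function names are hypothetical.

```python
import math


def voxel_bounds(level, index, scene_min, scene_size):
    """Hypothetical leaf-voxel geometry: a voxel at Octree `level` with integer
    index (i, j, k) has edge length scene_size / 2**level."""
    size = scene_size / (2 ** level)
    lo = tuple(scene_min[a] + index[a] * size for a in range(3))
    hi = tuple(lo[a] + size for a in range(3))
    return lo, hi, size


def ray_box(origin, direction, lo, hi):
    """Slab-method ray/box test; returns (t_near, t_far) or None on a miss."""
    t0, t1 = -math.inf, math.inf
    for a in range(3):
        if direction[a] == 0.0:
            # Ray parallel to this slab: it must already lie inside it.
            if origin[a] < lo[a] or origin[a] > hi[a]:
                return None
            continue
        ta = (lo[a] - origin[a]) / direction[a]
        tb = (hi[a] - origin[a]) / direction[a]
        t0 = max(t0, min(ta, tb))
        t1 = min(t1, max(ta, tb))
    if t1 <= max(t0, 0.0):
        return None
    return max(t0, 0.0), t1


def trilinear(c, u, v, w):
    """Trilinear interpolation of corner values c[i][j][k], (u, v, w) in [0, 1]^3."""
    return (c[0][0][0] * (1 - u) * (1 - v) * (1 - w) + c[1][0][0] * u * (1 - v) * (1 - w)
            + c[0][1][0] * (1 - u) * v * (1 - w) + c[0][0][1] * (1 - u) * (1 - v) * w
            + c[1][1][0] * u * v * (1 - w) + c[1][0][1] * u * (1 - v) * w
            + c[0][1][1] * (1 - u) * v * w + c[1][1][1] * u * v * w)


def voxel_alpha(origin, direction, lo, hi, size, corner_density, K=3):
    """Opacity of one voxel along a ray: alpha = 1 - exp(-sum_k sigma_k * step),
    with K midpoint samples on the ray-voxel intersection segment."""
    hit = ray_box(origin, direction, lo, hi)
    if hit is None:
        return 0.0
    t_near, t_far = hit
    step = (t_far - t_near) / K  # segment length per sample (unit-length direction)
    optical_depth = 0.0
    for k in range(K):
        t = t_near + (k + 0.5) * step
        p = [origin[a] + t * direction[a] for a in range(3)]
        u, v, w = ((p[a] - lo[a]) / size for a in range(3))  # voxel-local coords
        optical_depth += trilinear(corner_density, u, v, w) * step
    return 1.0 - math.exp(-optical_depth)
```

Midpoint sampling integrates a density that varies linearly along the ray exactly, so a small K already approximates the smooth trilinear field well; the choice of K here is an assumption, not a value taken from the paper.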

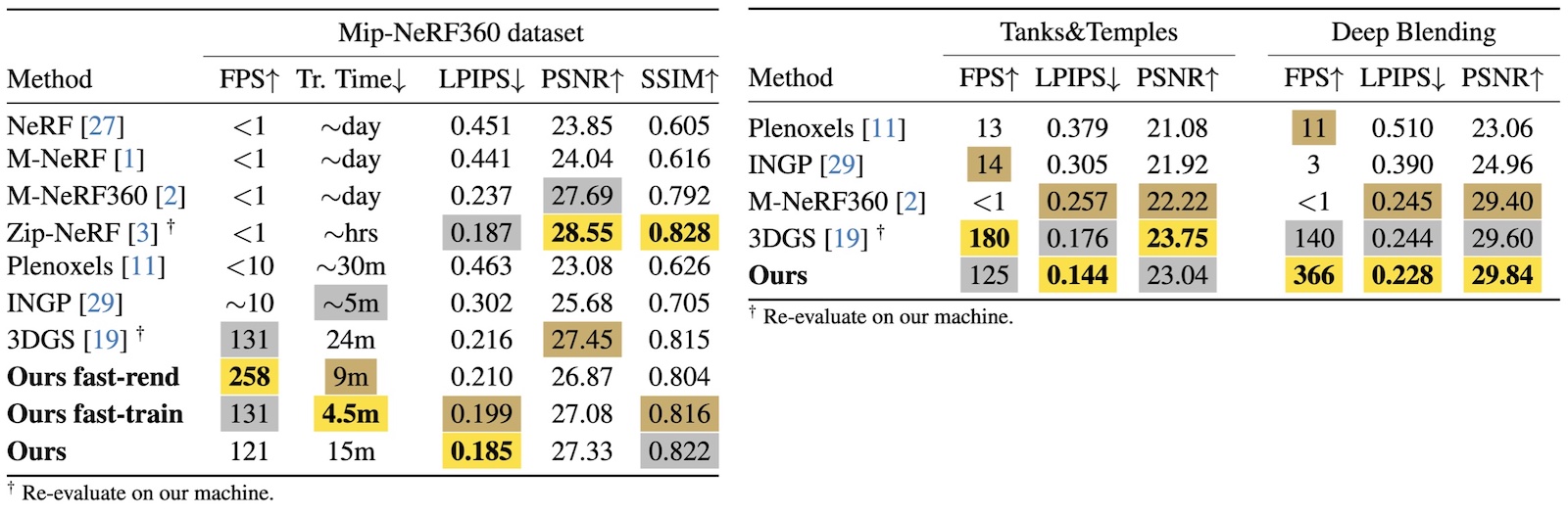

Novel-view Synthesis Results

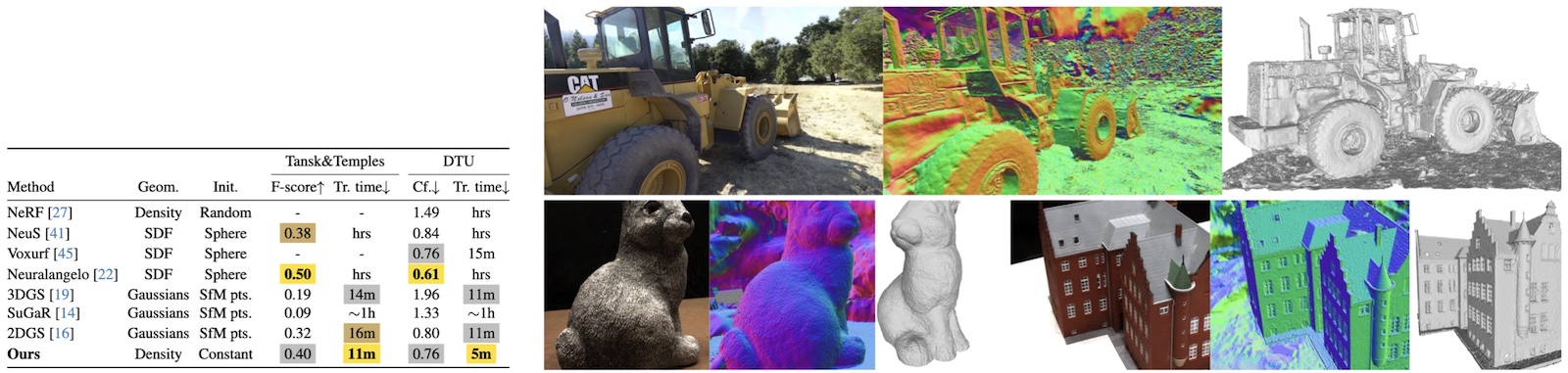

Adaptive Sparse Voxel Fusion

Fusing 2D modalities into the trained sparse voxels is efficient. Each grid point simply takes the weighted average of the values from the 2D views, following the classical volume fusion method. Several examples are shown below.
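A minimal sketch of weighted-average fusion of a 2D modality into voxel grid points. Assumptions (not from the page): a pinhole camera with intrinsics `(fx, fy, cx, cy)` and a 3x4 world-to-camera matrix, pixel `i` covering the range `[i, i+1)` with nearest-pixel lookup, and a per-pixel weight map supplied by the caller. The dictionary keys and the function name `fuse_grid_points` are hypothetical.

```python
import math


def fuse_grid_points(points, views):
    """Fuse per-view 2D values into 3D grid points by weighted averaging.

    points: list of (x, y, z) world-space grid-point positions.
    views:  list of dicts with hypothetical keys
            'K'      -> (fx, fy, cx, cy) pinhole intrinsics,
            'w2c'    -> 3x4 world-to-camera matrix (list of rows),
            'image'  -> H x W nested list of values to fuse,
            'weight' -> H x W nested list of per-pixel weights.
    Returns one fused value per point, or None if no view observes it.
    """
    fused = []
    for p in points:
        acc, wsum = 0.0, 0.0
        for view in views:
            M = view['w2c']
            # Transform the point into camera space.
            xc = [sum(M[r][c] * p[c] for c in range(3)) + M[r][3] for r in range(3)]
            if xc[2] <= 0.0:
                continue  # point is behind this camera
            fx, fy, cx, cy = view['K']
            u = math.floor(fx * xc[0] / xc[2] + cx)
            v = math.floor(fy * xc[1] / xc[2] + cy)
            img = view['image']
            if 0 <= v < len(img) and 0 <= u < len(img[0]):
                w = view['weight'][v][u]
                acc += w * img[v][u]
                wsum += w
        fused.append(acc / wsum if wsum > 0.0 else None)
    return fused
```

Each grid point is processed independently, so the loop parallelizes trivially; a real system would vectorize it and likely use bilinear rather than nearest-pixel sampling.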

Rendered depths → Sparse-voxel SDF → Mesh
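One way to realize the depth → SDF → mesh pipeline is sketched below, substituting the classical projective truncated-SDF formulation (as in KinectFusion-style volume fusion); the page does not specify the exact formulation, so this is an assumption. Each view contributes a truncated SDF observation per grid point from its rendered depth; these observations can then be weighted-averaged per grid point, and Marching Cubes places mesh vertices where the fused SDF crosses zero along grid edges. `tsdf_observation`, `edge_zero_crossing`, and the truncation distance `trunc` are hypothetical names.

```python
def tsdf_observation(rendered_depth, point_depth, trunc):
    """Projective truncated SDF of one grid point as seen from one view.

    rendered_depth: depth rendered at the pixel the point projects to.
    point_depth:    the point's own camera-space depth in that view.
    Returns a value in [-1, 1] (positive in front of the surface), or None
    when the point lies more than `trunc` behind the observed surface.
    """
    sdf = rendered_depth - point_depth
    if sdf < -trunc:
        return None  # likely occluded: no reliable observation from this view
    return max(-1.0, min(1.0, sdf / trunc))


def edge_zero_crossing(p0, p1, s0, s1):
    """Linearly interpolate the zero crossing of the SDF on the grid edge
    p0 -> p1, as Marching Cubes does when placing a mesh vertex.
    Assumes s0 and s1 have opposite signs."""
    t = s0 / (s0 - s1)
    return tuple(p0[a] + t * (p1[a] - p0[a]) for a in range(3))


print(edge_zero_crossing((0, 0, 0), (1, 0, 0), 0.5, -0.5))  # (0.5, 0.0, 0.0)
```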

BibTeX

@article{Sun2024SVR,
  title={Sparse Voxels Rasterization: Real-time High-fidelity Radiance Field Rendering},
  author={Cheng Sun and Jaesung Choe and Charles Loop and Wei-Chiu Ma and Yu-Chiang Frank Wang},
  journal={ArXiv},
  year={2024},
  volume={abs/2412.04459},
}